NerdHole News

This is the Nerdhole system documentation where I consolidate the knowledge I gain from my nerdish activities.

Current projects:

- Nerdhole Small Company or Home Office On Linux or N-SCHOOL.

- Medway Little Theatre to the 21st Century

- The Beast From The South - Flagship writing project

- A stitch in time - MLT Original Play project

2026 Blog Entries

2026-01-02 09:21 New Year's Resolutions

I need to pick up some more skills to make me more attractive to employers - CI/CD, Containers, perhaps even (spit) AI. If I do not improve my working environment within this year, I don't know what will happen.

Let's go!

2025 Blog Entries

2025-12-27 11:03 Gitlab and docker

So to stave off depression, I have installed Gitlab on the Nerdhole, and I now have an extra Docker server. Took me two days to get to a working Gitlab server, mostly because I forgot a step in the SSL instructions. But now, it's running. I have created a Docker host on labo106 as well. I am now watching Nana's Docker tutorial. Tomorrow, I want to do a complete deployment of a test application using Github CI/CD.

Note to self: If at some point I start to actually use Docker containers, I may have to install a Docker server on a permanent machine. I need to quickly investigate building a local repository. Should not be too difficult. I hope.

2025-12-24 13:55 Note to self

On all my ansible boxen, I need to update the community.general collection to the latest version as I need a module in there.

```shell
ansible-galaxy collection install community.general \
  -p /usr/share/ansible/collections/ansible_collections \
  --upgrade
```
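Since this has to happen on every Ansible box, a small loop saves some typing. A minimal sketch, assuming each host is reachable over ssh and lets you sudo; the function name update_general_collection is made up for illustration, and DRY_RUN=1 only prints the commands instead of running them.

```shell
# Hypothetical helper: upgrade community.general on every host named on the command line.
# Assumes ssh access to each host and sudo rights there.
# Set DRY_RUN=1 to print the commands instead of running them.
update_general_collection() {
  for host in "$@"; do
    ${DRY_RUN:+echo} ssh -t "$host" sudo ansible-galaxy collection install community.general \
      -p /usr/share/ansible/collections/ansible_collections --upgrade
  done
}

# Example (host names are placeholders):
#   update_general_collection host1 host2
```

Afterwards, ansible-galaxy collection list community.general on each host shows which version ended up installed.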

2025-10-06 08:46 Well that was interesting

So "The Rivals" is done and dusted. We got a lot of attention, sold most of our tickets, and made lots of money for MLT. This is the first time we've actually been told how much we made, which is nice. I got sick after the Thursday performance, and had to be off work on the Friday. Luckily after a good day's sleep (shivering with fever, unable to keep food down) I was well enough to sit in a chair in a warm room for a while. We did not miss a single performance. And now I'm OK.

I have also talked to my mum for the first time since her operation and she is dealing with her loss amazingly well. Born in the middle of WW2, tough as nails! Have taken to calling her "Oma Kobus".

So now, back to the next project: Getting Ben his Dutch passport. Run through the checklist, make the appointment at the embassy. Let's go!

2025-09-16 10:32 Uphill struggle

Second therapy session. It is getting clear to me what CBT is - a way to overcome the inertia that leads to hibernation and inactivity, or as the apologists have it, the sin of Sloth. Looking at this honestly, I don't think simple laziness is my problem. If I see clearly what I need to do, and how to do it, and I can do this thing, then I will not stop until it is done. What I cannot do, and arguably shouldn't do, is suffer fools gladly. I almost physically recoil and get gag reflexes when I am told to do something that is objectively stupid. And there is too much of that going round.

Oh, and Management? Security experts? Stakeholders? If your sysadmin is bent, You. Are. Screwed. It is within your best interest to keep the people you let into the high voltage accounts on your network happy, gruntled, and in a good mood. You listen to what they have to say. You let them get on with their work. You give them what they need. You stay out of their way. We are the sysadmins. We are driven, passionate people. We live to make shit work. No amount of incentive, threats, or punishment motivates us as much as a clear job and the ability to do it.

2025-09-10 12:19 Train ramblings

Need to put my train time to good use. This morning I wrote a piece of text on my situation with all the filters off. No, it isn't here, no I will never show anyone. But very cathartic it was.

2025-09-09 18:50 Apostilles!

Heroics. I did a heroic. I walked all the way from Cannon Street to a law firm on Oxford Street, and handed in Ben's birth certificate to be apostilled. We are now looking at £250 worth of document, so I will treasure it. I know this is not a lot of money in lawyers' terms, but... geez. I will get the shiny documents back next Thursday and then I can book an appointment at the Dutch Embassy to get the Boy a shiny new passport. He will be able to prove he is Dutch! Mummy can take care of the British passport. I daresay it'll be a lot cheaper than this, but frankly I am paying only for my own stupidity. If I had renewed the passport before it ran out, things would have been a lot easier.

I need to call my dad.

2025-09-07 13:30 Lirael now running on Alucard

Well there you have it. This is sounding like a bad crossover fic, but I have automatically installed Alucard as a KVM server, and Lirael is now happily running on Alucard. The background for this is that I may want to replace Emerald with Alucard as the upstairs workstation (because smaller) and then re-purpose Emerald as a generic server. Emerald does not really have enough memory for running a bunch of virtual machines, and even though Algernon is of course the KVM monster, I want to be able to play with VMs on several hosts at the same time.

It is very gratifying to see that I can still build things like this when Work is doing its level best to make me feel worthless and incompetent. I think my next project will be to build a CI/CD pipeline on the Nerdhole. I will have a fishtank of containers running here yet.

2025-09-05 16:17 Count your blessings

Well, today I called the Dutch embassy, and it seems that I have more time than I thought I had. I will be able to wait until Ben's next holidays to get him a passport. His Dutchness will not fall from him at the time of his coming of age. So I will have time to obtain the apostilles for the various certificates.

September has set in, and with it the new Office Presence Mandate which is a massive drain on the exchequer, and also on what is left of my mental health. I am taking steps and seeking help, so there is that. Must find a way to drain all this anger and despair from my mind. Most important in this is probably information. I really need to unfuck my home and work situation.

And then there is my mother. She had her operation and is now recovering in hospital. I haven't been able to talk to her yet. Maybe she wants to get better first. Probably a generational thing.

On a whim, I have bought some extra RAM for my little Dell OptiPlex micro. Hope it fits. If it does, I will try to install it as my new media player and retire Emerald.

Also, I have finally bought a set of strings for the abandoned Epiphone I found on the roadside. I had to mess around with the truss rod a little, and I have raised the nut a little so the strings don't rattle anymore. It sounds great, considering. So now my guitar count is at four, and a BASS.

2025-09-01 08:36 September rain

It is now September. Which means I have to get Ben his passport within the next few weeks or he will never have one. It does focus the mind. I also have an important doctor's appointment in a few hours. I may need a while to focus myself.

And then there is my mother, who is going through a very hard time.

Too much shit going on right now, and not enough energy to deal with it. I'll have to find some.

2025-08-28 11:52 Two more days...

I'm a bit late getting into the office today. I volunteered for something - there was something high prio happening to a few machines. So after we got the thing back up and running, we needed to do Root Cause Analysis. A post mortem if you will. So I start digging through the log files, and am not seeing anything especially useful, so I ask the Team for help. My Team member then took over the entire job without bothering to tell me or answering any of my questions, or indeed picking up the phone.

The one thing that makes life bearable here at work is the sense of camaraderie between team members. This is not a good example. Oh well. Back to the office we go. If this ends up not counting towards my mandatory office presence, I shall be royally pissed off.

2025-08-27 10:26 I'm onna train

I am heading into work. I'm shelled into my home net using the wifi on the train. Now and then you do need to appreciate the technical marvels of this day and age. I can work from anywhere! That being the case... why do I have to lug myself into the office to do exactly the things I am doing from home? By travelling off-peak, at least I've made this somewhat affordable, and the time I waste in transport is now half company time, half my own. Still, I am much more productive in the Nerdhole with my own coffee, my own desk, my own music, and my own keyboard and monitor. Having to compete with others for desks is not a cheering prospect.

I swear, the first manager type who tells me it's great to see me in the office is getting the full nine yards.

I'm reinstalling my laboratory machine park, and sad to say it didn't go flawlessly. For some reason, Ansible sometimes fails to create temporary directories in Builder's home directory. No idea why. But still, the installs are now running properly.

So far.

2025-07-09 08:32 Something must be done...

I am not feeling well, sad to say. This is generally due to:

- Work

- Low, low energy levels.

- The unending streams of Stupid in the news and other places.

- Feelings of being all alone.

Don't want to go into details, but I have taken next week off, and I plan to use it to change course. Maybe I need a whole month off. Or a year. I can't go on like this, the helm has to turn. I have asked some of my friends for help, but I can't expect too much from them. I'll reach out to the professionals as well. Have to get better. I want my brain back.

2025-05-21 08:33 On the rocky road...

So I'm playing with a freshly installed Rocky Linux now. My first impression is: "Where is all the software?!" But Rocky is a bit conservative in installing and enabling software repos, which is a stylistic choice I can understand. I still have to set Paya up as a Samba server, web server, and so on. And then take it into MLT and show them what I have in mind if they give me the hardware. I hope I can. This is one place where I can really put my professional knowledge to use.

Oh well.... Time to caffeine up and start the day. Most likely by begging for permission to do my job.

2025-05-19 14:17 Rocky is back!

I have just installed Rocky Linux 9.5 on Paya. It used to have a problem where it would forget its screen, mouse, and keyboard whenever you switched away from it on the KVM. I have just switched away as before, and it is coming back with no issue. I will have to try this a few more times to be sure, but tentatively, I can welcome back Rocky into the list of viable linux distros! I will now set up a reposync job for Rocky so I can install it without getting onto the Internets. Good good.

2025-05-19 13:24 Electronics!

I have bought shiny things! Partly for myself, partly for my son. I am going to introduce him to the joys of electronics! Transistors! Resistors! Capacitors! Light Emitting Diodes! Bits of wire! I have started playing with it myself and so far have already smoked one transistor and one LED. I have also added a Raspberry Pi Pico to the mix. It has a lot of interesting bits and bobs: passive infrared detectors, proximity sensors, beepers, and what have you. I haven't done much with the Pico, beyond hooking it up to my computer and looking at the files stored therein. My son, you have a project!

2025-05-08 15:01 How to retire old linuxes?

At the moment, my BIS playbooks will add all the ISO files to the infrastructure, but as of yet I have no way of retiring old versions. I could go for the old nuke-and-rebuild paradigm. So now I need a list of things my playbooks add to a BIS server. Either that, or simply never retire any version of Linux ever.

In other news, RHEL 10 is looming on the horizon, and I would like to start playing with it as soon as I can. It seems to be doing a lot with containers, so I may have to investigate those. OpenShift in the Nerdhole is a bit of a dark horse. I have installed it once or twice, but never fully automatically, and I have yet to deploy an actual application on it.

Time to ponder. My brain feels like a bit of cotton wool at the moment. I need some project to get it back in gear or I will just end up hibernating.

2025-04-10 11:18 Find something to do

I am about to become the company's leading PowerBroker expert. I have all the installation manuals and I have examples of the installation playbooks used last time. High Up has been trying to get rid of PowerBroker for ages, but they don't know what exactly it does, and in addition the cherished replacement solution has not been shown to actually, like, work. Hope this sets me up for the years until I retire.

Meanwhile, to get my creative fix, I am installing one of my lab boxes as a file server, the likes of which I shall then install at Medway Little Theatre.

Keep my brain in motion...

2025-03-23 15:53 Fixin' the Blues...

Work is not really helping my mental health at the moment, so to convince myself I still have the mojo to do heavy lifting, I've reinstalled my not-in-use workstations Alucard and (soon) Paya. Being CentOS Stream 9, the installation of various third party video codecs is proving a bit challenging. I need to figure it out, and then I need to automate it into the Workstation role.

Meanwhile, I think Alucard would be better off not being a boot/install server so I think I'll re-spot it in a while.

At the theatre, things are progressing - we now have a new sound setup based on a Polycom 12x12 matrix mixer - sound goes from anywhere to anywhere. I am going to have to design MLT a new fileserver where we can store all of our show files, configurations, and what have you. This machine will be well and truly headless.

So now Alucard is running, on to Paya.

2025-02-06 21:50 Reality check

I'm sitting in the lobby of the Indigo hotel in Brussels (for Work), sipping a Leffe blonde, typing away on Rayla. I have a connection to my main server at home and while it's not fast enough to run an email client remotely, I can run git updates just fine.

Internetting like mad!

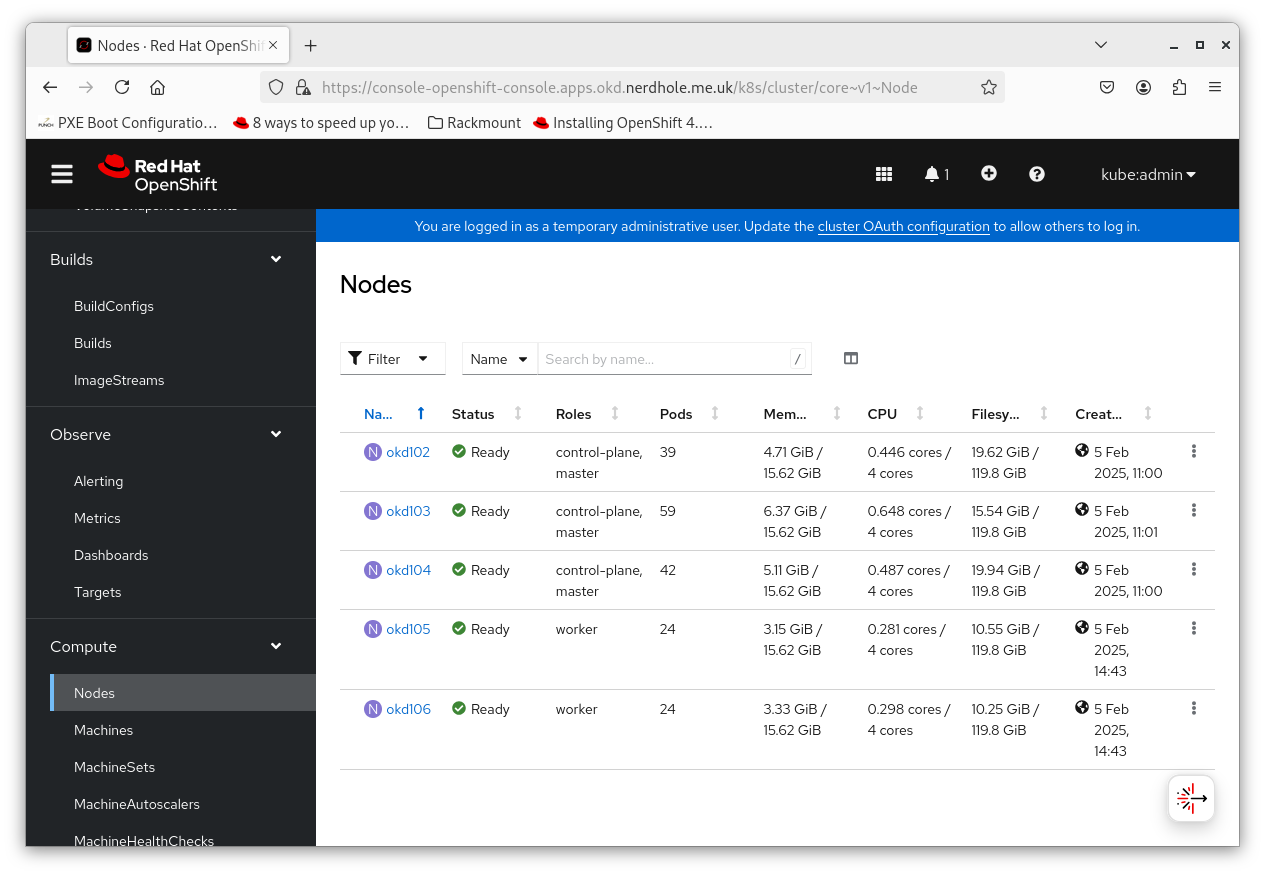

2025-02-05 16:50 I have a console!

So basically, what you need to do is just wait an hour or so between Bootstrap and Masters, and between Masters and Workers, and then the thing will come alive.

It is a bit of a performance pig, though. I was wise to stuff as much memory in Algernon as will fit. This is the performance of an empty cluster! Good thing I'm not going to put any actual applications on them or I'd need a much bigger box.

So now I have two months to grok OpenShift, and then I need to reinstall the sucker. It's DOCUMENTING TIME!!!

2025-02-05 09:30 Get the damn thing to run!

I tried reinstalling the cluster yesterday and it failed doing its usual OpenShift thing of sitting still sulking. I think this is due to various files left over from earlier installs. I am now going to remove everything to do with the cluster from everywhere and retry.

On Algernon, remove:

- /local/kvm/xml/bootstrap.ign
- /local/kvm/xml/master.ign
- /local/kvm/xml/worker.ign

From my home directory, remove:

- ~/okd/

On the load balancer, remove:

- /root/okd/

And now I shall run all the plays. If this works, I will add cleanup plays to all relevant playbooks.
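Until those cleanup plays exist, the list above can be turned into a small shell helper. This is a sketch, not what the playbooks do: the role names (kvmhost, workstation, loadbalancer) and the function names are made up, and the optional prefix argument lets you rehearse against a scratch directory before pointing it at the real filesystem. Removing the whole ~/okd/ and /root/okd/ directories, rather than just the visible files, also disposes of any hidden installer state left in them.

```shell
# Hypothetical clean-up helpers for leftovers from a previous OKD attempt.
# The paths are the ones listed above; the role says which machine we are on.

# Print the stale paths for a role, one per line.
okd_stale_paths() {
  case "$1" in
    kvmhost)      printf '%s\n' /local/kvm/xml/bootstrap.ign \
                    /local/kvm/xml/master.ign \
                    /local/kvm/xml/worker.ign ;;
    workstation)  printf '%s\n' "$HOME/okd" ;;   # run as yourself, not via sudo
    loadbalancer) printf '%s\n' /root/okd ;;
    *) echo "unknown role: $1" >&2; return 1 ;;
  esac
}

# Remove the stale paths for a role. The optional prefix is prepended to every
# path, so the clean-up can be rehearsed against a scratch directory first.
okd_cleanup() {
  role="$1"; prefix="${2:-}"
  okd_stale_paths "$role" >/dev/null || return 1
  okd_stale_paths "$role" | while IFS= read -r p; do
    rm -rf -- "${prefix}${p}"
  done
}

# Example, as root on the KVM host:
#   okd_cleanup kvmhost
```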

- ansible-playbook -Kk --tags openshift_host test.yml: OK.
- ansible-playbook -Kk --tags openshift_load_balancer test.yml: OK.
- ansible-playbook -Kk --tags openshift_bootstrap test.yml:
  - Start of the installation is OK.
  - RHCOS install went OK.
  - Cluster bootstrap not looking good...
- ansible-playbook -Kk --tags openshift_masters test.yml:
  - Start of the installation is OK.
  - RHCOS install is OK.
  - Bootstrapping still not complete.
  - Network load high as masters are downloading their software.
  - Installation seems to be complete.

Well. Several hours later, and bootstrap is complete. Install is not complete yet, but I'm kicking off the workers install anyway. Maybe it will be flagged complete when the workers are up.

- ansible-playbook -Kk --tags openshift_bootstrap test.yml:
  - Start of the installation is OK.
  - RHCOS install went OK.

Debugging commands:

```shell
curl -k 'https://localhost:6443/livez?verbose'
curl -k 'https://localhost:6443/readyz?verbose'
curl -k 'https://localhost:6443/healthz?verbose'
```

On both load balancer and bootstrap, all the checks are passing. Still wait-for bootstrap-complete does not continue. Why is this? I'm assuming that this is because the masters aren't starting. So I will start those now.
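The three checks can be rolled into one command that prints just the HTTP status of each endpoint and fails if any of them is not 200. A sketch under assumptions: the function name is hypothetical, it drops ?verbose because only the status code is used (add it back to see which individual check is failing), and it defaults to https://localhost:6443 as in the commands above.

```shell
# Hypothetical helper: summarise the API server health endpoints in one go.
# Pass another base URL (e.g. the api-int name through the load balancer) to test that path.
okd_health_check() {
  api="${1:-https://localhost:6443}"
  rc=0
  for ep in livez readyz healthz; do
    # -s: quiet, -k: accept the cluster's self-signed certificate,
    # -w '%{http_code}': print only the HTTP status (000 if no connection).
    code=$(curl -sk -o /dev/null -w '%{http_code}' "$api/$ep" || true)
    echo "$ep: $code"
    [ "$code" = 200 ] || rc=1
  done
  return "$rc"
}
```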

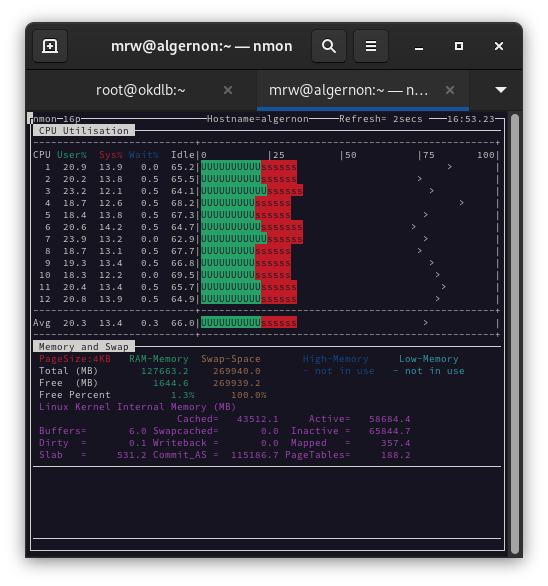

2025-02-01 09:52 I have a running cluster!

OpenShift did its thing again... It sat there for hours apparently doing nothing, and then it suddenly completed and started giving me node lists with the oc commands and everything else. So OpenShift is now sitting here eating up Algernon's CPU cycles and scratching on its NVMe disk. It is most unsatisfying. The last things I did were:

- Disable the firewall on the load balancer. I may just keep it like that because life is too short.
- Wait for the bootstrap to complete with:
  - openshift-install --dir ./okd/install/ wait-for bootstrap-complete

  This completed in seconds, but I haven't accurately measured the time it took. I may just have to repeat the entire exercise and give it a ludicrous time-out.
- Wait for the installation to complete with:
  - openshift-install --dir ./okd/install/ wait-for install-complete

  I was likewise late to the party with that, so I don't know how long this is likely to take.

So the secret ingredient seems to be time. I am still not sure what exactly the hold-up is. This is an AMD Ryzen 5 running at 4GHz, with 128GB of RAM and an SSD for all important things. Its little CPUs are now 25% busy doing nothing! I guess in the age of cloud computing we have CPU capacity to burn and efficiency is Somebody Else's Problem.
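Since time appears to be the main ingredient, the wait-for steps can simply be retried rather than babysat. A hypothetical wrapper, assuming that each openshift-install wait-for call gives up after its own built-in timeout and that calling it again should be harmless; the attempt limit and the OPENSHIFT_INSTALL override are additions for illustration, not installer features.

```shell
# Hypothetical wrapper: keep re-running an openshift-install wait-for stage until it succeeds.
# Usage: okd_wait <bootstrap-complete|install-complete> <install-dir> [max-attempts]
# OPENSHIFT_INSTALL can point at a different installer binary (default: openshift-install).
okd_wait() {
  stage="$1"; dir="$2"; max="${3:-12}"
  n=1
  # Each call blocks until the stage completes or the installer's own timeout expires.
  while ! "${OPENSHIFT_INSTALL:-openshift-install}" --dir "$dir" wait-for "$stage"; do
    if [ "$n" -ge "$max" ]; then
      echo "giving up on $stage after $n attempts" >&2
      return 1
    fi
    n=$((n + 1))
  done
  echo "$stage reached after $n attempt(s)"
}

# Example, using the install directory from the commands above:
#   okd_wait bootstrap-complete ./okd/install/ && okd_wait install-complete ./okd/install/
```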

So what comes out of this?

- OpenShift is not going to be a permanent fixture in the Nerdhole. It is a study object and nothing more.

- I am going to disable the firewall on the OpenShift load balancer. I could painstakingly figure out which ports to open, but basically screw that.

- I will need to turn my ramblings and braindumps into a proper design for OpenShift that I can just follow and have a running cluster afterwards.

So... On with that. As soon as the Young Man finishes his homework.

Is it Polish Beer Time? I think when I nuke and recreate my cluster, it will be.

2025-01-31 10:25 Now or never...

Last day, and not even a full day. Tonight I pick up the Young Man from his mum, and then it's no more hacking.

Out of nowhere, installs started throwing up messages like:

Get "https://localhost:6443/api?timeout=32s": tls: failed to verify certificate: x509: certificate has expired or is not yet valid: current time 2025-01-31T10:18:45Z is after 2025-01-30T15:06:21Z

The installer is clearly using old certificates. I'd like it to stop doing that please. Maybe somewhere in the plays, the certificates don't get deleted.

2025-01-30 07:30 Another day, another doesn't work...

Here we go again. Tried to reboot the bootstrap machine after the installation "Completed" but that's not led to happiness. So. How can I tell that the bootstrap server is done cooking?

I found a promising troubleshooting guideline.

Right then. Time for a start-to-finish disembuggerance.

Are my config files correct?

For "Compute," I corrected my "worker" stanza to have zero replicas. Not sure if that fixes anything, but I know you should not be specifying replicas at this stage. You add the things later. Gods, if that is it...

A YouTube video on the various networks

2025-01-29 09:09 If this is madness, there's method to it.

So where am I now? I have the automation to set off the installation of the bootstrap node, but I still have no way of telling if this is actually working. RHCOS helpfully shows you how to watch the log files as it goes along. So what to do next?

Red Hat says that the sequence is as follows:

- Provision and reboot the bootstrap machine

- Provision and reboot the master machine

- Bootstrap the master machine

- Shut down the bootstrap machine

- Provision and reboot the worker machine

- Worker machine joins the OpenShift cluster

Which is nice, but lacking in details. So off to Google we go to get more details.

Firstly, I will switch my RHCOS image to the one referenced by OpenShift itself:

openshift-install coreos print-stream-json

This prints the CoreOS stream metadata as JSON, including the URLs where you can download RHCOS images. Maybe that will work better.
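The JSON is long, so it helps to fish out just the QEMU image. A quick-and-dirty sketch using grep and sed instead of a proper JSON parser such as jq; it assumes the x86_64 QEMU disk appears under a "location" key whose URL contains qemu.x86_64.qcow2, which is an assumption worth checking against your installer's actual output. The function name is hypothetical.

```shell
# Hypothetical helper: pick the x86_64 QEMU qcow2 URL out of the installer's stream metadata.
# Text matching, not JSON parsing: assumes the disk URL sits under a "location" key
# and contains "qemu.x86_64.qcow2". Prints the first match only.
okd_qemu_image_url() {
  "${OPENSHIFT_INSTALL:-openshift-install}" coreos print-stream-json |
    grep -o '"location": *"[^"]*qemu\.x86_64\.qcow2[^"]*"' |
    sed 's/^"location": *"//; s/"$//' |
    head -n 1
}

# Example:
#   curl -LO "$(okd_qemu_image_url)"
```

The image typically comes compressed, so unpack it (gunzip or unxz, depending on the file name) before copying it to the KVM host.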

Hmm... It looks like the KVM dnsmasq installation isn't working properly. It does not give us fully qualified domain names. Let's see if I can't improve on that. Found promising info at the libvirt.org website. I have now added a DNS domain to the config, like so:

<domain name="{{cluster.name}}.{{cluster.basedomain}}" localOnly="no" register="no"/>

So time to rebuild the whole damn lot...

And maybe again. I may have to populate the Shiftnet name server like this:

```xml
<dns>
  <txt name="example" value="example value"/>
  <forwarder addr="8.8.8.8"/>
  <forwarder domain='example.com' addr="8.8.4.4"/>
  <forwarder domain='www.example.com'/>
  <srv service='name' protocol='tcp' domain='test-domain-name' target='.'
       port='1024' priority='10' weight='10'/>
  <host ip='192.168.122.2'>
    <hostname>myhost</hostname>
    <hostname>myhostalias</hostname>
  </host>
</dns>
```

Maybe I can use this construction to point all the OKD nodes at the main name server, which already has all the correct entries. This is just a side hustle.

Well, I just reinstalled the bootstrap machine, and it is strapping its boots at the moment and it has its fully qualified domain name. Hopefully, this is why it didn't work. If DNS is shot, most other things are too.

Well, I have found how to get oc to work on the load balancer: you need the kubeconfig file generated by the installer, and then you point an environment variable at it:

export KUBECONFIG=/root/okd/install/auth/kubeconfig

Not that I'm getting much actual data, but it's a start.

When I start the master nodes, they keep shooting off messages like:

GET error: Get "https://api-int.okd.nerdhole.me.uk:22623/config/master": dial tcp 10.12.2.100:22623: connect: no route to host

This port is indeed not open on the bootstrap node, so the question is why not.

2025-01-28 14:41 Back to business...

Well, OKDLB is running again and from now on I will use sudo virt-manager to manage hosts. Time to re-run my setup playbooks: ansible-playbook -Kk --tags openshift_host,openshift_load_balancer test.yml.

Got a syntax error on my shiftnet.xml definition file. How the hell did I not notice that sooner?!

The /bin/qemu-img resize command will (reasonably) fail if the size is smaller than what it already is. Turns out I missed the "G" behind the size. I don't think RHCOS will fit in 120 bytes. In fact, I will not resize the rhcos qcow file after copying it over, but when I install the OKD node. That way Ansible will not overwrite it every time.
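A small guard would have caught the missing G. A sketch, assuming sizes should always carry a unit: qemu-img reads a bare number as bytes, and it refuses to shrink an image unless you pass --shrink, which is why 120 failed. The function name is hypothetical, and DRY_RUN=1 prints the command instead of running it.

```shell
# Hypothetical guard around qemu-img resize: refuse sizes without a unit suffix,
# because a bare number is taken as bytes (the "120 bytes" mistake above).
# Set DRY_RUN=1 to print the command instead of running it.
okd_resize() {
  img="$1"; size="$2"
  case "$size" in
    *[0-9][KMGT]) ;;   # e.g. 120G, or +10G to grow relative to the current size
    *) echo "refusing size '$size': add a K, M, G or T suffix" >&2; return 2 ;;
  esac
  ${DRY_RUN:+echo} qemu-img resize "$img" "$size"
}

# Example (path is a placeholder):
#   okd_resize bootstrap.qcow2 120G
```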

Are we nearly there yet? I can now install the bootstrap server using the qcow file and feed it the proper ignition file, but I think I am missing something. What I've seen up to now:

- Done: Generate /root/cluster/install-config.yaml

- Done: openshift-install create ignition-configs

- Not done: openshift-install create manifests

Openshift-install requires an installation directory. For our okd cluster, the installation directory will be /root/okd/install/. Since openshift-install has the nasty habit of deleting your install-config.yaml, we will be copying that from the directory above every time we invoke it.

Right then, I think I understand this. The command openshift-install create manifests generates some intermediate files that in some cases need to be changed before the ignition files are created using openshift-install create ignition-configs. If desired, create ignition-configs can also go straight from the YAML file. What comes rolling out are the ignition files, which you feed to RHCOS through the fw_cfg parameter so RHCOS can set itself up as a bootstrap node, a master node, or a worker node. I have seen this work on the bootstrap node, but the messages it spits out are far from clear and there are a lot of them. I honestly can't tell whether it's OK or not. And I still don't have a working config for the oc command.
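The manifests-then-ignition sequence, including the copy from the directory above, fits in one function. A sketch using the /root/okd and /root/okd/install layout described above; the function name is made up. It empties the install directory first, on the assumption (worth verifying) that openshift-install keeps generated assets, certificates included, in a hidden .openshift_install_state.json there and reuses them on the next run.

```shell
# Hypothetical helper: install-config.yaml lives in TOP, the installer works in TOP/install/
# and gets a fresh copy every run because it consumes the file.
# The install directory is emptied first so no state from an earlier run is reused.
okd_make_ignition() {
  top="${1:-/root/okd}"
  dir="$top/install"
  inst="${OPENSHIFT_INSTALL:-openshift-install}"
  rm -rf -- "$dir" && mkdir -p -- "$dir" || return 1
  cp -- "$top/install-config.yaml" "$dir/" || return 1
  # Intermediate manifests: edit these here if the cluster needs tweaks.
  "$inst" create manifests --dir "$dir" || return 1
  # bootstrap.ign, master.ign and worker.ign, built from the manifests.
  "$inst" create ignition-configs --dir "$dir"
}
```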

Info from freekb on the subject is here.

We then need to transfer these ignition files to the KVM server so we can put them into our new virtual machine. They need an SELinux type of svirt_home_t or KVM won't be able to touch them.
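Labelling the copied files could look like this. A sketch, assuming the ignition files live in /local/kvm/xml, the directory the 2025-02-05 entry cleans up; the function name is hypothetical. chcon sets the type directly, but a later restorecon or filesystem relabel can reset it, so it has to be repeated after each copy. If Ansible does the copying, the copy module's setype parameter can set the type in the same task.

```shell
# Hypothetical helper: give the ignition files the SELinux type KVM needs (svirt_home_t).
# chcon is not persistent across a relabel; re-run after every copy.
# Set DRY_RUN=1 to print the commands instead of running them.
okd_label_ignition() {
  dir="${1:-/local/kvm/xml}"
  for f in "$dir"/*.ign; do
    [ -e "$f" ] || continue   # no .ign files: the glob stays literal, skip it
    ${DRY_RUN:+echo} chcon -t svirt_home_t "$f"
  done
}

# Check the result with:
#   ls -Z /local/kvm/xml/*.ign
```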

2025-01-28 12:18 Well that was fun...

The reason I could not run my VMs turned out to be rather banal: libvirtd wasn't running despite being enabled to run at boot. Also from now on as soon as I start virt-manager, all my VMs crash. This is not the quality I like from my systems! But in order to keep going, I'll avoid running virt-manager as myself and run it as root on Algernon.

Hmm... On Emerald, libvirtd is not running either, and I can start Ariciel just fine. So the poison seems to be virt-manager exclusively. I am not pleased.

Anyway, time for lunch and then we go back to Openshift hacking.

2025-01-28 09:18 Tuesday

No social at MLT tonight, so I'll have the whole day to hack OpenShift... yay!

I need to figure out two things: First, how to bootstrap OpenShift using RHCOS. I'll hack that into the openshift_nodes role for now, but I think at a later date I'm going to add a "qcow2 copy" installation method to the BIS repertoire. Second, how to make the kubectl or oc command available to the users at large so we can do openshift stuff with it. Mostly a matter of figuring out where to get the config files from and where to put them.

But first, Sypha is long overdue a yummy update. So let's do that first.

Oh yes... Juuust run a quick yum update on Algernon. And now my virtual machines won't start. So now I am reinstalling Algernon from scratch. Hope that fixes the problem. If not, I am royally screwed. Thanks CentOS!

2025-01-27 13:50 Late start...

I procrastinated. I have turned off all the equipment upstairs - no TV, no YouTube. Time to get serious about this. Maybe I'll take today to write a proper design for the Nerdhole OpenShift installation. I'll start with rebuilding the load balancer. I'll also download a fresh version of the installer and Kubernetes client because I can. I find I have not included entries in my playbook to install the installer and client, so that's next I suppose.

2025-01-24 13:56 Just one weekend, and then...

Another glorious week of home network hacking is about to start. The main theme is going to be to build an OpenShift cluster on Algernon. Why this has to be so hard is beyond me, but it is. I am still trying to figure out how best to set up the various Red Hat CoreOS (RHCOS) images. The first thing I tried was to copy the RHCOS image to the KVM server in QCOW2 format and feed it an ignition file using the fw_cfg mechanism. This works perfectly for bare-bones RHCOS installs, but for some bizarre reason OpenShift won't have it. The second way is to provision a new machine, assign it the RHCOS install CD and somehow feed it an ignition file. Research suggests that it needs to be on a web server somewhere, but details are scarce and vague.
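For reference, the QCOW2-plus-ignition method looks roughly like this with virt-install. A sketch under assumptions: the VM size, disk size, osinfo name (rhel9.0) and function name are illustrative choices, shiftnet is the KVM network from these notes, and the ignition file needs the svirt_home_t label mentioned elsewhere before QEMU can read it. The fw_cfg key opt/com.coreos/config is where CoreOS looks for an ignition config under QEMU. DRY_RUN=1 prints the command instead of running it.

```shell
# Hypothetical helper: boot a CoreOS qcow2 image on KVM and hand it an ignition file via fw_cfg.
# Sizes, the osinfo name and the backing-image approach are assumptions; adjust to taste.
# Set DRY_RUN=1 to print the command instead of running it.
okd_coreos_vm() {
  name="$1"; image="$2"; ign="$3"
  ${DRY_RUN:+echo} virt-install --connect qemu:///system \
    --name "$name" --vcpus 4 --memory 16384 \
    --os-variant "${OS_VARIANT:-rhel9.0}" \
    --import --graphics none --noautoconsole \
    --disk "size=120,backing_store=$image" \
    --network network=shiftnet \
    --qemu-commandline="-fw_cfg name=opt/com.coreos/config,file=$ign"
}

# Example (image path is a placeholder):
#   okd_coreos_vm bootstrap /path/to/rhcos-qemu.x86_64.qcow2 /local/kvm/xml/bootstrap.ign
```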

This of course leaves open the question of what I'll do with it once I have it. I have the premier application environment in my very own home, now deploy something on it! But first I need to see the OpenShift console. Then, I can start playing with the environment.

In other news, Rayla is really enjoying her new batteries. She can keep going for hours! Tipping over is but a minor inconvenience.

It'll be very good to be away from Work for a while. I spend most of my days being annoyed, anxious, angry, and disappointed. If I were young and employable, I'd be out of here. As it is, I'm stuck.

2025-01-11 09:35 Time for another hacking session

Right. I have the infrastructure, I have the information. What I do not yet have is a syntactically correct OpenShift install-config.yaml. Like all modern software, messages on the failures are next to useless. "I'm having trouble unmarshalling your JSON, but I won't tell you what's wrong." This is my current template:

```yaml
---
apiVersion: v1
baseDomain: {{cluster.basedomain}}
metadata:
  name: {{cluster.name}}
compute:
- name: worker
  hyperthreading: Enabled
  platform: {}
  replicas: 0
controlPlane:
  hyperthreading: Enabled
  name: master
  platform: {}
  replicas: 3
networking:
  networkType: OVNKubernetes
  machineNetwork:
  - cidr: 10.12.2.0/24
  clusterNetwork:
  - cidr: 10.254.0.0/16
    hostPrefix: 23
  serviceNetwork:
  - cidr: 10.255.0.0/16
platform:
  none: {}
pullSecret: '{{okd_vault.pull_secret}}'
sshKey: '{{okd_vault.ssh_pubkey}}'
```

hostPrefix: The subnet prefix length to assign to each individual node. For example, if hostPrefix is set to 23, then each node is assigned a /23 subnet out of the given cidr, which allows for 510 (2^(32 - 23) - 2) pod IP addresses. If you are required to provide access to nodes from an external network, configure load balancers and routers to manage the traffic.
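The arithmetic in that description generalises to a pair of one-liners, which also shows how many nodes each clusterNetwork in these configs leaves room for. A worked sketch; the function names are made up.

```shell
# Each node gets a /hostPrefix slice of the cluster network:
#   pod addresses per node = 2^(32 - hostPrefix) - 2
#   node slices in a /cidr cluster network = 2^(hostPrefix - cidr)
pods_per_node() {
  host_prefix="$1"
  echo $(( (1 << (32 - host_prefix)) - 2 ))
}

max_nodes() {
  cidr_prefix="$1"; host_prefix="$2"
  echo $(( 1 << (host_prefix - cidr_prefix) ))
}

# The two configs in this entry:
#   10.254.0.0/16, hostPrefix 23 -> max_nodes 16 23 = 128 nodes, 510 pods each
#   10.128.0.0/14, hostPrefix 23 -> max_nodes 14 23 = 512 nodes, 510 pods each
```

For a home cluster of three masters and a couple of workers, either layout leaves plenty of headroom.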

I also have a somewhat working example that at least produced a set of ignition files:

```yaml
apiVersion: v1
baseDomain: nerdhole.me.uk
metadata:
  name: okd
compute:
- name: worker
  hyperthreading: Enabled
  replicas: 2
controlPlane:
  hyperthreading: Enabled
  name: master
  replicas: 3
networking:
  networkType: OpenShiftSDN
  clusterNetwork:
  - cidr: 10.128.0.0/14
    hostPrefix: 23
  machineNetwork:
  - cidr: 10.12.2.0/24
  serviceNetwork:
  - 172.30.0.0/16
platform:
  none: {}
fips: false
pullSecret: '{{okd_vault.pull_secret}}'
sshKey: '{{okd_vault.ssh_pubkey}}'
```

So now to find the right combinations. I am removing the platform lines from the compute and controlPlane stanzas to see if that works better. The network needs to be OVNKubernetes because openshift-install tells me so.

And still it does not work... Doing the magical cut-and-paste. See if that helps.

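OK, now at least I have ignition files... Okay. Is it stumbling on the cidr: in my serviceNetwork stanza?! It seems so: serviceNetwork takes a plain list of CIDR strings, whereas clusterNetwork and machineNetwork take cidr: entries. Consistency truly is the last refuge of the unimaginative. Time for a commit.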

2024 Blog Entries

2024-12-31 11:57 Cows are not supported?!

Right. One step forward, two steps back. I had my infra set up to install a cluster using the method of copying a QCOW2 image to the KVM host and then booting that. Simple! Sadly this is not supported, and I need to install the cluster nodes from an ISO image. Right then... Down we load the iso.

Looking at this, it's actually not too bad. The virt-install command supports a CD as an install source and then it's just a matter of specifying the correct startup parameters.

2024-12-30 09:51 Final steps on OpenShift

I think I have my KVM environment set up to the specifications needed by OpenShift. Separate virtual network (Shiftnet) with a separate IP subnet (10.12.2.0/24), DNS entries for the OpenShift nodes defined, special DNS wildcard for *.apps.okd.nerdhole.me.uk, a template for the YAML file from which to generate an ignition file, a RHCOS image on the main server to be copied out to the KVM host. Now all I need to do is to get the actual OpenShift software on there. If memory serves, that is a matter of providing an installation file, and feeding that to the bootstrap server. So let's see how we can do that.

Upwards and onwards as they say.

2024-12-27 08:50 Not quite yet...

While I have made some progress on the provisioning of the OpenShift nodes, I'm not quite there yet. I now know how to set up an OpenShift node and feed it an ignition file, so I'll have to Ansible that up. And then I can finally get on with the actual OpenShift installation. If Work leaves me a moment, I'll see about inserting that into the playbooks.

2024-12-25 18:53 Well well...

Okay, so I have made some progress on the OpenShift front, but I hit a snag. The Ansible playbooks provided by my benefactor assume that you install the thing with PXE - they have you download a kernel, initramfs, and rootfs, and take it from there. So what I have up to now is a KVM config for Shiftnet, with the following features:

- A separate NAT network named shiftnet, that seems to be working well.

- A running load balancer, okdlb, with one Ethernet in Frontnet and another in Shiftnet. Waiting to have the OC client installed on it.

- Some commands to build a fresh machine in KVM.
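The Shiftnet NAT network can be kept in Ansible as well. A sketch using the community.libvirt collection; the bridge name virbr-shift and the gateway address 10.12.2.1 are assumptions, and only the network name and the 10.12.2.0/24 subnet come from this log.

```yaml
- name: "Define the shiftnet NAT network"
  community.libvirt.virt_net:
    command: define
    name: shiftnet
    xml: |
      <network>
        <name>shiftnet</name>
        <forward mode='nat'/>
        <bridge name='virbr-shift' stp='on' delay='0'/>
        <!-- no <dhcp> range: addresses are assumed to be assigned elsewhere -->
        <ip address='10.12.2.1' netmask='255.255.255.0'/>
      </network>

- name: "Start shiftnet now and on every boot"
  community.libvirt.virt_net:
    name: shiftnet
    state: active
    autostart: true
```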

I have also built a bootstrap node that runs Fedora CoreOS, but due to this and that, it won't let me in. Still it has its proper IP addresses so that is something.
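Fedora CoreOS ships without passwords; the documented way in is as the core user with an SSH key supplied through the ignition config. If that is what is missing here (a guess), a minimal Butane config, compiled into an ignition file with the butane tool, looks like this. The key is a placeholder. For an OpenShift bootstrap node, the sshKey from install-config.yaml ends up on the core user in the same way.

```yaml
# bootstrap.bu: compile with  butane --pretty --strict bootstrap.bu > bootstrap.ign
variant: fcos
version: 1.5.0
passwd:
  users:
    - name: core
      ssh_authorized_keys:
        - ssh-ed25519 AAAA...replace-with-real-public-key builder@sypha
```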

So now I need to get the setup instructions from some other place.

2024-12-23 14:35 OpenShift or bust

"So do you have any plans for Christmas?"

Why yes, yes I do. I want my OpenShift cluster. I will use the latest instructions I found, renew my subscriptions with Red Hat if I need to, and get the damn thing running on Algernon. I have two days. I am going full on Autistic mode, and will not do anything else for those 48 hours.

So that's what I'm planning.

2024-12-16 09:03 And it's a wrap...

It's a Wonderful Life just went through its last performance this Saturday. This is the first time I actually had some doubts as to whether we'd pull it off, but we did! Sterling effort by all concerned. I am now attempting to burn the show onto a DVD. I've had to cycle through a few machines and pieces of software to get this to happen. Strangely, I was able to squeeze an 11GB file onto a 4.7GB DVD. Must be some form of compression going on. And I've now replaced the fuse on my BluRay player so I won't have to miss Alita: Battle Angel after all. Will put the DVD players back in their original setup soon.

Work wise, we're in the last few weeks of the year. We have a change freeze, lots of us are on holidays, but I'm working through. I hope I'll be able to fix a few things in the working environment and come to terms with more obstacles put in our way by the unsophisticated. Joy. If I don't see you, Merry Christmas!

2024-12-12 12:17 I bought a thing

Well, two things actually. One is a tiny bit of string that goes between Rayla's Mini DisplayPort and an HDMI monitor. This will enable me to hook her up to Flippin' Big Monitors or projectors and run presentations. The other thing is a tiny 12-channel DMX512 controller that I intend to use for MLT's house lights. I've been programming scenes on the Big Desk while people were in the building, and surprisingly, they want to see where they are going. And what happens then is that you forget to turn the house lights off and they get added to the scene you are working on. So. Separate set of Slidey Things, and that will not happen anymore.

On a mental note: I just subscribed to Bread on Penguins on Youtube, and she makes content on entry- to mid-level Linux. And puts in the occasional piece of wisdom having to do with hacking your mind. I have no idea why, but she's inspired me to claw out of my current rather dismal mental state by reminding me that I have control over my mental processes! Amazing no? So. From now on, I'll be counting blessings, concentrating on the positive, and trying to suppress my ongoing anger at the world at large. Let's see how that works out. Just writing it down turns it into a commitment of sorts, even if nobody reads it.

2024-12-04 11:12 Well then... some videos will run!

I just reinstalled Alucard and the Workstation role still chokes on the installation of RPM Fusion's video codecs. But I have at least some progress. The poison package is compat-ffmpeg4, and if you simply do not install that, the process completes. However, some codecs do not get installed. But Youtube now works, and certain educational sites now work as well. So I can wait for the RPM Fusion people to recompile their offerings against libav 7 rather than 6.
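A sketch of that workaround in the Workstation role, assuming the codec packages live in a hypothetical list variable workstation_codecs. The exclude only helps as long as nothing on that list hard-requires compat-ffmpeg4; if something does, dnf refuses the whole transaction instead.

```yaml
- name: "Install RPM Fusion codecs, leaving out the poison package"
  ansible.builtin.dnf:
    name: "{{ workstation_codecs }}"   # hypothetical list of package names
    exclude:
      - compat-ffmpeg4
    state: present
```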

2024-11-25 08:56 Rsync is rsyncing

I just downloaded the latest and greatest from CentOS, and then of course I had to back that up to Algernon. The new disk is doing what it should, and the rsync process can now be started unattended from a Cron job or from a playbook. Don't exactly know which I'll choose. So the first half of the backup facilities are done, documented and automated.
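For the Cron option, a sketch using Ansible's cron module. The 02:30 schedule is an assumption; /usr/local/bin/rsync-backup is the script name from the 2024-11-17 entry.

```yaml
- name: "Run the nightly rsync backup as root"
  ansible.builtin.cron:
    name: "nschool rsync backup"   # identifies the entry so reruns don't duplicate it
    user: root
    minute: "30"
    hour: "2"
    job: /usr/local/bin/rsync-backup
```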

So what next? I think I may have to start putting an actual user interface on NSCHOOL - meaning commands to be run on clients and servers. I don't really want to go on a trek through my documentation every time I update my hosts.

Oh, and I have to make sure that Thunderbird is at the very same level everywhere. Because it throws a strop if you access the mail using anything older than the latest. So that's the next job I guess.
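A sketch that keeps Thunderbird current everywhere. ansible.builtin.package hands off to dnf on CentOS and apt on Mint, so state: latest means "newest in that machine's own repositories"; CentOS and LMDE boxes can still end up on different versions.

```yaml
- name: "Keep Thunderbird at the newest packaged version"
  ansible.builtin.package:
    name: thunderbird
    state: latest
```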

Looking at my collection of hardware... Where do I want my machines to live? I think it would be more aesthetically pleasing to have a stack of Optiplexes sitting under my TV. But that would mean I have three PCs attached to my TV, and a DVD player. My TV only has three HDMI connections. Not that I would use them that often.

I think I am going to put Paya back into her role as Little Server. So I'd have to put her somewhere I can have her on all the time. Decisions, decisions. Will put that off till later. Meanwhile I have theatricals to consider. Premiere night for "It's A Wonderful Life" is closer than we think.

Ugh. Repos are not being cooperative. We have a version clash between RPM Fusion and EPEL. This is explained in a Reddit post here. So we don't upgrade any workstations just now...

2024-11-22 06:24 Theatrics

The production of "It's a Wonderful Life" is gearing up. I'm doing the lights, and as it turns out some of the sound. Digging through the script as I normally do, I find more sound cues than light cues. The director also wants images projected on the back of the stage, which Chris is doing. We have a Fuck-off Big Projector for the purpose that has been securely hung up above the stage. The only problem is that it casts a shadow when I turn on the big floods. So let's not do that then. I have other lights. Today is my last day going into the office for this month. I have one day left on my weekly season ticket, so maybe I'll take the Boy out to London. Been wondering what he wants for Christmas and it turns out to be... video games. No surprise there. I'll have to think about that, there may be more useful presents.

Meanwhile, the Nerdhole rsync facilities are designed, and now I have only to build them. I'm expecting some trouble with SELinux. But then I always do. It'll be good to have. Having just one copy of my important stuff on one disk worries me. Though of course I do have git repos all over the place.

After last month's strop, Rayla is now behaving properly. The new batteries are great. The main tank has never run empty yet, so I don't have to lug around a charger. Just plug her in when I get home. Nice. She's turning into quite a capable machine.

And as a periodic note to self: Get OpenShift running on Algernon while it can still run it.

2024-11-20 06:29 I'm onna train...

It's that week of the month again, where Work want me to be in the office. So having outfitted Rayla with a fresh pair of batteries, I'm using the time to update my documentation. Needless to say I don't do that on the work laptop so I am now lugging around two laptops! Went to Theatre yesterday and hooked up Rayla to the DMX network. Tried out the QLC+ Sequence feature. I can now put waves of colour all over the stage. I'm still not doing any real stage plays with this, but next time I have to busk a music performance, I may well give it a go.

Now, on with the backup server design. Thinking on rsync, the client will have to run as root in order to back up every file on the client. How I start this, I'm not sure yet. I see rsync as more of a sysadmin facility, whereas Bacula users need to back up and restore files without gaining root access. Sysadmins can put up with having to kick off a chmod or chown or two. Office users don't want to.

2024-11-17 10:26 Get my back up

I am really going to have to get the backup system running on Algernon and its big 12TB disk. Twelve commercial terabytes, that is. Whoever came up with Kibibytes? While I figure out Bacula, this will be done with a simple rsync. The main problem is moving things from one machine to the other. How to do that in a way that a small company would find cryptographically sound?
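For the record, the gap between commercial and binary terabytes on this disk:

$$
12\,\text{TB} = 12 \times 10^{12}\,\text{bytes} = \frac{12 \times 10^{12}}{2^{40}}\,\text{TiB} \approx 10.91\,\text{TiB}
$$

So the operating system will report the new disk as roughly 10.9 TiB.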

Secure shell with public and private keys is of course the first answer to that. However, we may have to prepare for the event that one of these keys will fall into the wrong hands. Who will be initiating that contact? From the user perspective, it is most convenient to have the client initiate the backups. To be able to back up literally everything, the backup needs to run as root. It needs to be started as a local user.

So. We need a script (say, /usr/local/bin/rsync-backup) on every backup client that starts up an rsync to the backup server.
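A minimal sketch of that script, deployed with Ansible. The backup host name, the destination path /backup/rsync/<host>/, the key file name and the exclude list are assumptions. It runs as root on the client and pushes everything to Algernon. On IPA clients the automounted NFS home directories would also need an --exclude, or they get backed up once from every client.

```yaml
- name: "Install the rsync-backup script on a backup client"
  ansible.builtin.copy:
    dest: /usr/local/bin/rsync-backup
    owner: root
    group: root
    mode: "0755"
    content: |
      #!/bin/bash
      # Push this machine's files to the backup server (hypothetical names).
      set -euo pipefail
      BACKUP_HOST=algernon
      DEST="/backup/rsync/$(hostname -s)/"
      # -a: archive, -A: ACLs, -X: extended attributes, -H: hard links
      rsync -aAXH --delete --numeric-ids \
        --exclude=/proc --exclude=/sys --exclude=/dev \
        --exclude=/run --exclude=/tmp \
        -e "ssh -i /root/.ssh/id_ed25519_backup" \
        / "root@${BACKUP_HOST}:${DEST}"
```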

So. Time to generate some SSH keys. Root at Sypha will use a passwordless ed25519 key. It is specifically meant for unattended automated processes, so putting a password on it is not desirable. Then, we need to make sure that this key only gets used for rsync. Needless to say, this will still let root@sypha read any file from the target host,
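A sketch of both halves, assuming the community.crypto and ansible.posix collections. The key file name, the backup_server variable and the /backup/rsync/sypha directory are hypothetical. Forcing rrsync (a helper script distributed with rsync; its install path varies by distribution, /usr/bin/rrsync is assumed here) in authorized_keys confines that key to rsync inside a single directory, which narrows the "read any file" problem to the backup area.

```yaml
- name: "Create root's passwordless backup key on the client"
  community.crypto.openssh_keypair:
    path: /root/.ssh/id_ed25519_backup
    type: ed25519
  register: backup_key

- name: "Allow that key on the backup server, for rsync only"
  ansible.posix.authorized_key:
    user: root
    key: "{{ backup_key.public_key }}"
    # rrsync limits the session to rsync within one directory;
    # restrict turns off forwarding, PTY allocation and the like.
    key_options: 'command="/usr/bin/rrsync /backup/rsync/sypha",restrict'
  delegate_to: "{{ backup_server }}"   # hypothetical variable, e.g. algernon
```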

2024-11-13 09:00 Proof of Concept

So yesterday I took my little USB-to-DMX box to the Theatre, hooked it up to the Universe, and found I could actually control the lights from Rayla using QLC+. This is progress. It means I could run an entire show all from Linux Mint Debian Edition. Now all I need to do is familiarise myself more with QLC+, and then I can cook up some stuff at home for use in theatre.

2024-11-10 08:41 Minecraft and Nerdhole-craft

So it's back to work tomorrow. My restorative week off is over. Sitting here watching my son watching Minecraft videos. Ye gods. People are building entire cathedrals in there! So today we chill, then we lunch, then we go out for an Activity. Must have an Activity. On a related note, the phrase "Without further Adieu" is really starting to grind my gears.

I'm going to have to take stock of my Nerdcrafting here and see where I need to add. My North Star is: OpenShift. I need to get the software again, re-subscribe to the appropriate accounts, and turn Algernon into an OpenShift cluster.

2024-11-08 10:21 Final day...

This is the last day of unfettered Linux hacking. I have actually achieved quite a lot. Sypha is now the unchallenged boss of the network, and Paya is taking a well deserved rest. All my workstations (Algernon, Alucard, Paya) are now at the latest stable release of CentOS, and lots of small things have improved about the environment. Remote X displays are faster for one. I can manually install several different flavours of Linux including Linux Mint Debian Edition. At some point I want to add Debian itself to the mix as well. I am writing this on Rayla, using both of her shiny new batteries, in a coffee shop in Chatham, watching the people walk by. I am much restored after the last months of stress. Monday will be my next day at work, but let's not think about that now.

So. Now comes the time to go over my documentation and let the world know just how clever I've been.

I also need to split up the nschool role into its constituent parts so I can, for instance, reconfigure my DNS/DHCP servers. Maybe when I get home.

2024-11-07 11:23 Automatic vdisks are a go

As expected, the precise syntax of a dynamic results loop variable took a while to figure out, but I have it now. So now the storage role will create the new storage volumes on the KVM host and then attach them to the guest. The filename is: labo100-datavg-vdc.qcow2. Short hostname, volume group name, disk name. I have to admit that the role is not as idempotent as could be desired, but I want to get on with the rest of the installation procedure. So. Keep this as a todo item. It's almost lunch time, so I think Pie and Mash are in order.
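Roughly what that amounts to, as a sketch: the data_disks list and its fields, the kvm_host variable and the image directory are hypothetical, and it assumes the libvirt domain is named after the short hostname; only the file-name pattern comes from the text. qemu-img is guarded by creates:, but virsh attach-disk fails on a second run because the target device is already taken, which is the idempotency gap mentioned above.

```yaml
- name: "Create the data disk images on the KVM host"
  ansible.builtin.command:
    argv:
      - qemu-img
      - create
      - -f
      - qcow2
      - "/var/lib/libvirt/images/{{ inventory_hostname_short }}-{{ item.vg }}-{{ item.disk }}.qcow2"
      - "{{ item.size }}"
    creates: "/var/lib/libvirt/images/{{ inventory_hostname_short }}-{{ item.vg }}-{{ item.disk }}.qcow2"
  loop: "{{ data_disks }}"   # e.g. [{vg: datavg, disk: vdc, size: 20G}]
  delegate_to: "{{ kvm_host }}"

- name: "Attach the data disks to the guest"
  ansible.builtin.command:
    argv:
      - virsh
      - attach-disk
      - "{{ inventory_hostname_short }}"
      - "/var/lib/libvirt/images/{{ inventory_hostname_short }}-{{ item.vg }}-{{ item.disk }}.qcow2"
      - "{{ item.disk }}"
      - --driver
      - qemu
      - --subdriver
      - qcow2
      - --targetbus
      - virtio
      - --persistent   # live + config if running, config only if shut off
  loop: "{{ data_disks }}"
  delegate_to: "{{ kvm_host }}"
```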

ETA: And now with the storage good enough to be getting on with, I will be constructing my Rebuild playbook that should be able to rebuild anything except maybe the main server.

2024-11-07 09:23 Last building day

Today is the last day I have genuinely free from 09:00 till 18:00. I need to add a few more functions:

- Make the storage role add virtual data disks to a virtual machine.

- Create the Rebuild.yml and Reconfigure.yml playbooks where I can put my app roles.

- Add a wrapper script nschool for them so I can explain things to the user about which password to use.

To do nested loops in Ansible is (surprise) a bit of a nightmare, but a Redditor named onefst250r has a way of flattening nested data into a single list of items. For example:

```yaml
- name: debug
  ansible.builtin.debug:
    msg: "{{ item.source }} {{ item.dest }} {{ item.port }}"
  vars:
    # Each row: source host, destination host, space-separated ports
    csv_data:
      - [ "sourcehost1", "destinationhost1", "22 3000 8096" ]
      - [ "sourcehost1", "destinationhost2", "22 3000" ]
      - [ "sourcehost2", "destinationhost1", "22 80 443" ]
  # Build a flat list with one {source, dest, port} dict per port and
  # hand it to loop; the dashes strip the whitespace between the tags.
  loop: >-
    {%- set results = [] -%}
    {%- for item in csv_data -%}
    {%- for port in item[2].split(' ') -%}
    {%- set _ = results.append({
      "source": item[0],
      "dest": item[1],
      "port": port
    }) -%}
    {%- endfor -%}
    {%- endfor -%}
    {{ results }}
```

I'm sure I can adapt this technique to my needs.
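For the storage case specifically, Ansible's built-in subelements filter does this kind of flattening (one item per parent/child pair) without hand-written Jinja. A sketch with a hypothetical storage_vgs variable:

```yaml
- name: "One loop item per (volume group, disk) pair"
  ansible.builtin.debug:
    msg: "{{ item.0.name }} gets /dev/{{ item.1 }}"
  vars:
    storage_vgs:
      - name: datavg
        disks: [vdc, vdd]
      - name: appvg
        disks: [vde]
  loop: "{{ storage_vgs | subelements('disks') }}"
```

This loops three times: (datavg, vdc), (datavg, vdd) and (appvg, vde).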

2024-11-06 09:00 Partial success.

Yesterday, the installation of a VM went reasonably smoothly. I am using the same pxeinstall role for putting the OS on both physical and virtual machines. I am generating XML files for defining the VMs on the KVM hosts, and of course I forgot to put in a few variables. I'll check out the template one more time. There was only one problem that was vaguely perplexing. A VM stores its non-volatile RAM in a file, and since I had not put the hostname in that file's name, I could only start one VM at a time. The only reason that file even interests me is that it contains the Secure Boot setting, which for the nonce I want off until I find a way to support signed boot images.
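A sketch of the relevant parts of a domain definition, pushed through community.libvirt.virt. It assumes libvirt 7.2 or newer for firmware feature selection; the kvm_host variable, sizes, paths and the default network are hypothetical. The points of interest are the NVRAM file named after the guest and the disabled secure-boot feature.

```yaml
# Sketch: kvm_host, sizes, paths and the network name are hypothetical.
- name: "Define {{ inventory_hostname_short }} on its KVM host"
  community.libvirt.virt:
    command: define
    xml: |
      <domain type='kvm'>
        <name>{{ inventory_hostname_short }}</name>
        <memory unit='GiB'>4</memory>
        <vcpu>2</vcpu>
        <os firmware='efi'>
          <type arch='x86_64' machine='q35'>hvm</type>
          <firmware>
            <!-- ask libvirt for an OVMF build without Secure Boot -->
            <feature enabled='no' name='secure-boot'/>
            <feature enabled='no' name='enrolled-keys'/>
          </firmware>
          <!-- one NVRAM file per guest, named after the guest -->
          <nvram>/var/lib/libvirt/qemu/nvram/{{ inventory_hostname_short }}_VARS.fd</nvram>
        </os>
        <devices>
          <disk type='file' device='disk'>
            <driver name='qemu' type='qcow2'/>
            <source file='/var/lib/libvirt/images/{{ inventory_hostname_short }}.qcow2'/>
            <target dev='vda' bus='virtio'/>
          </disk>
          <interface type='network'>
            <source network='default'/>
            <model type='virtio'/>
          </interface>
        </devices>
      </domain>
  delegate_to: "{{ kvm_host }}"
```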

Meanwhile Rayla is enjoying her new battery. I briefly feared I'd already broken it by completely discharging it for the statistics, but it's charging now. I hope it, with the other new battery, will extend Rayla's stamina on the road. I intend to take her to a cafe when time comes to document all that I have done.

So today will mostly be spent debugging the kvmguest role. When Emerald can reliably install a VM, I will reinstall the Beast of Algernon with KVM and get all my labo VMs up and running again. And then if I have time left, I will put in the Reinstall/Reconfigure playbooks.

But first... COFFEE!!!

ETA: Ansible and the default sftp server don't like each other. Set transfer_method = scp in the [ssh_connection] section of ansible.cfg (older releases used scp_if_ssh = True instead) to make Ansible use scp only and stop this silliness.
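The same setting can also live with the inventory rather than in ansible.cfg, through the ssh connection plugin's variable. A sketch for a group_vars file; the file name is just an example.

```yaml
# group_vars/all.yml
# Copy module payloads to the targets with scp instead of sftp.
ansible_ssh_transfer_method: scp
```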

2024-11-05 10:23 And now we go virtual!

Right. Time for the big part of this week - restoring the Nerdhole's ability to auto-install virtual machine hosts and guests. We start with Emerald, and then we do Algernon.

2024-11-05 08:39 And so begins the second day

I've made some progress. The boot/install server role will now use the general storage and filesystems roles. I have tested it on Alucard, and while I can't test it of course, all the exports seem to be there. The "Restart services" bit is still giving me some trouble though, so I will have to look into that. Change them from handlers to simple tasks, most likely. They didn't get triggered.

ETA: I've rebuilt Alucard now, and am running the bis role on him with the ISO images already in place. So now, the appropriate handlers should be triggered.

ETATA: All except the web server. Have added that now. Do I really want to work on the uninstall and purge options now?
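Something to keep in mind with handlers: a handler only runs when a task that notifies it reports changed, and by default only at the end of the play. If the files are already in place, the tasks report ok and nothing gets notified. A sketch with a hypothetical template name; the meta task makes pending handlers run at that point instead of at the end.

```yaml
- name: "Configure the BIS web server"
  ansible.builtin.template:
    src: bis-httpd.conf.j2          # hypothetical template
    dest: /etc/httpd/conf.d/bis.conf
  notify: Restart httpd

- name: "Run any pending handlers now, not at the end of the play"
  ansible.builtin.meta: flush_handlers

# In handlers/main.yml:
# - name: Restart httpd
#   ansible.builtin.service:
#     name: httpd
#     state: restarted
```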

BIS is important because it is an essential part of my recovery strategy should Sypha (gods forbid) catastrophically fail.

So today I will look into why this might be the case. And then I'll start on the KVM host and KVM guest roles, which is the whole point of this exercise. I want to have my lab up and running again.

All in all, this should be an improvement. Upgrading to CentOS 9 has already benefited me. The remote X sessions are a lot faster now, videos have improved. The idea is of course that once I finish (haha) this, I will be able to apply the playbooks and knowledge gained to the Medway Little Theatre. Which is an entirely new round of design and building.

I also need to find a way to initially transport installation media to the boot/install server. If they are not there, then the ISO mounts and the NFS exports will fail and I have to redo them. The ISO images at the moment total about 70GB of storage. Should fit on a reasonably large USB key. It should easily fit on a reasonably sized laptop. Maybe I should build a laptop-based bootstrap environment. I'm not risking Rayla at the moment, but I could definitely try to press Yang into that kind of service. She's got a 1TB SSD if memory serves.
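One way to stop the mounts failing while the media isn't there yet, as a sketch: check for each ISO first and only loop-mount the ones that exist. The bis_isos list and its fields are hypothetical.

```yaml
- name: "Check which installation ISOs are present"
  ansible.builtin.stat:
    path: "{{ item.iso }}"
  loop: "{{ bis_isos }}"   # e.g. [{iso: /bis/iso/centos9.iso, mnt: /bis/centos9}]
  register: iso_stat

- name: "Loop-mount the ISOs that are there"
  ansible.posix.mount:
    src: "{{ item.item.iso }}"    # item.item is the original bis_isos entry
    path: "{{ item.item.mnt }}"
    fstype: iso9660
    opts: loop,ro
    state: mounted
  loop: "{{ iso_stat.results }}"
  when: item.stat.exists
```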

2024-11-04 08:47 Monday monday

Yesterday I managed to get a "filesystems" role running. It is meant to be a utility for other roles that want separate volumes for their data. It uses my standard application variables layout and creates all the file systems. The volume groups will be created by a relative, named "storage" which I will be developing shortly.
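A sketch of what the two roles boil down to, using modules from community.general and ansible.posix. The app_filesystems layout shown here is hypothetical, not the actual NSCHOOL application variables format, and XFS is an assumed file system type.

```yaml
# storage role: the volume group
- name: "Create the data volume group"
  community.general.lvg:
    vg: datavg
    pvs: /dev/vdc

# filesystems role: one LV, file system and mount per entry
- name: "Create logical volumes"
  community.general.lvol:
    vg: "{{ item.vg }}"
    lv: "{{ item.lv }}"
    size: "{{ item.size }}"
  loop: "{{ app_filesystems }}"   # e.g. [{vg: datavg, lv: bislv, size: 80g, mnt: /bis}]

- name: "Make XFS file systems"
  community.general.filesystem:
    fstype: xfs
    dev: "/dev/{{ item.vg }}/{{ item.lv }}"
  loop: "{{ app_filesystems }}"

- name: "Mount them"
  ansible.posix.mount:
    src: "/dev/{{ item.vg }}/{{ item.lv }}"
    path: "{{ item.mnt }}"
    fstype: xfs
    state: mounted
  loop: "{{ app_filesystems }}"
```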

Now that I am depending on Sypha for my daily driving, I can't reinstall her willy-nilly, so I may have to use either Alucard or Paya as a test main server. Well, I have coffee, I have music, I have nothing keeping me back. Upwards and onwards!

2024-11-03 Holidays!

I have thought on this and on reflection I don't like an hourly job to update the NSCHOOL website. Most of the time it won't be necessary and it should simply be triggered from the fact I am pushing an update to Sypha. I will have to put something in the sudoers file on Sypha that will allow members of sysadm to update. Will have a coffee and see how to implement this.
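A sketch of the sudoers part, using a drop-in file that visudo checks before it is put in place. The file name is an assumption; nschool_refresh is the script from the 2024-11-02 entry, and its /usr/local/bin location is a guess.

```yaml
- name: "Let sysadm members refresh the NSCHOOL website"
  ansible.builtin.copy:
    dest: /etc/sudoers.d/nschool
    owner: root
    group: root
    mode: "0440"
    content: |
      %sysadm ALL=(root) NOPASSWD: /usr/local/bin/nschool_refresh
    # Refuse to install the file if visudo finds a syntax error
    validate: /usr/sbin/visudo -cf %s
```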

Let the N-SCHOOLing begin!

2024-11-02 15:29 Am I procrastinating?

I have now added a Git Log report to the NSCHOOL website. I have also put in a script called nschool_refresh that will git-pull the nschool repository from the local git directory (this adds the requirement for the main server to also host a git repo). I intend to run this from cron.hourly so that my info will always be up to date. I will also run sync_repos.sh every week to download all the fixes. Having done this, I'll also need to run a yum update everywhere afterwards.
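A sketch of both jobs; the location of sync_repos.sh is assumed. The first task belongs in a play for the main server, the second in a play for all hosts, run after the sync.

```yaml
- name: "Sync the local repositories once a week"
  ansible.builtin.cron:
    name: "sync local repos"
    user: root
    special_time: weekly
    job: /usr/local/bin/sync_repos.sh   # assumed location

- name: "Then update every package on every host"
  ansible.builtin.dnf:
    name: "*"
    state: latest
```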

No more excuses now! Get busy on the playbooks!

2024-11-02 14:44 Paya is now upgraded.

Since she wasn't doing anything anymore, I have taken down Paya, and re-installed her as a workstation as was her original purpose. She has served me well as an emergency main server on CentOS 8. I think I will be using her henceforth as a web server and SSH server for the Great Outdoors, but for now, she can have a rest.

I am lurking on the Work chat groups, and maybe I'll be able to start my validations early. But maybe not.

So this is a bit of a confused start to my week of rest, relaxation, and work. I need to do the following this week:

- Reorganise my installation playbooks into the provisioning / OS install / authentication / configuration workflow.

- Build the provisioning playbook for KVM guests. (KVM may be playing a bigger role in my future so... yay?)

- Rebuild the WoW KVM guests and the labo hosts.

- Organise at least an rsync backup onto Algernon.

- Think of how to put my fictions onto the website.

So... Busy. Also, I need to go into Theatre tonight and film the Youth Extravaganza there. Let's see how much of this I can get done.

2024-10-31 15:43 A week of rest...

I'm taking next week off. I've dealt with Covid, all kinds of corporate unrecovery, and at times I was too tired to move my brain from one thought to the next. I want to spend at least a week operating a Linux network with a strict No Stupid Policy. At the end of which I hope to have all my machines, virtual or otherwise, up and running again using the current version of CentOS. I also want to have a proper backup running on Algernon using Bacula. And get some proper sleep. These 5am starts are taking it out of me, as is the whole day sitting still and quiet in an open plan office.

I'm currently reading through my documentation, and adjusting it to new insights. I don't trust myself yet with the actual playbooks and config files - that will come on Monday.

2024-10-30 06:27 Bob the builder

Just to collect some thoughts, what do I want to do with my builder account? Once a machine is in the IPA environment, you don't need it anymore. My own account can become root as necessary. But maybe not all machines will be IPA clients - laptops spring to mind.

For normal experimental use, I want to be using my personal account. So installing a web server, building a cluster, making a file server - that should all be done using the sysadmin accounts. The watershed for that is after the IPA configuration for IPA clients, and once the end user account is created for laptops and other non-IPA machines. So I may need another layer in my system provisioning system: Authentication.

2024-10-28 07:38 Reorganisation of the plays

I've been thinking about how to organise the installation of my machines, both physical and virtual, and I have come to the conclusion that I need to reorganise. I need to order my playbooks into three stages:

- Provisioning - building a machine.

  - For a physical box, that means hooking the thing up, and configuring the BIOS to boot in UEFI mode, normally from the hard drive and, when you mash F12 at boot time, from PXE.

  - For a KVM box, it means deleting (undefining) the entire machine including its disks, then recreating it from its XML definition file.

  - Once this is done, the machine can be installed the same way whether it is physical or virtual.

- OS Install - Booting installation media and setting up the OS.

  - Manually - Delete any grub.cfg files so that when booted from the network, it will present the menu of Linux flavours.

  - Automatically - Generate a grub.cfg file for the machine that specifies a kickstart file that enters the configuration for you and starts the OS installation. Hands-off. This is for custom jobs or pets.

  - Once this is done, the machine has a builder account that we can ssh into, from which we can configure the machine further.

- Configuration - Now that we have a known OS image on the machine, we can configure it further. We have a choice of the following:

  - IPA client - Set up the authentication from the main server. It also configures home directories and data directories auto-mounted through NFS.

  - Workstation - Puts on the Gnome desktop and a collection of useful office applications. It also puts a nice login picture on the machine if there is one.

  - KVM Host - Prepares the machine for hosting kernel virtual machines.

  - Backup host - Runs a backup server and also provides storage for rsyncs of data that we need no incremental backups for.

  - Backup client - Useful for the main server and any laptops we may have.
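As a sketch, the three stages map naturally onto separate playbooks that a top-level playbook imports in order. All file names here are hypothetical.

```yaml
# Rebuild.yml (hypothetical file names)
- name: Provisioning
  ansible.builtin.import_playbook: provision.yml

- name: OS install
  ansible.builtin.import_playbook: osinstall.yml

- name: Configuration
  ansible.builtin.import_playbook: configure.yml
```

A Reconfigure.yml would then import only configure.yml.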

I think I will need a "Machine hardware" variable for my hosts, with the following options:

- pc - For the workstations and laptops

- kvm - For KVM hosted virtual machines.

- lpar - For AIX machines, if I choose to resurrect my Power5 box.
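A sketch of how such a variable could steer the provisioning stage; the machine_hardware name and the provision_*.yml task files are hypothetical.

```yaml
# In host_vars/labo100.yml (hypothetical):
#   machine_hardware: kvm      # one of: pc, kvm, lpar

- name: "Refuse unknown hardware types"
  ansible.builtin.assert:
    that: machine_hardware in ['pc', 'kvm', 'lpar']
    fail_msg: "Unknown machine_hardware: {{ machine_hardware }}"

# Picks provision_pc.yml, provision_kvm.yml or provision_lpar.yml
- name: "Provision according to the machine's hardware type"
  ansible.builtin.include_tasks: "provision_{{ machine_hardware }}.yml"
```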

2024-10-21 08:42 Rayla's back...

I have re-installed Rayla using Linux Mint Debian Edition this time. Look ma! I'm running Debian! So now I get to reinstall all those little apps I've been accumulating. The big ones (QLC, Openshot, Linux Show Player and the like) are back, but Git wasn't so I installed that this morning. Needless to say, I am not impressed. But I like Cinnamon enough to keep using it for now. Also the mainstream applications are better with LMDE than with CentOS, which is more aimed at the corporate world.

So hey ho, off we go.

2024-10-20 11:17 Well crap!

Linux Mint disappointed me yesterday by bricking Rayla after a kernel update. Apparently the new and improved version doesn't understand something about my ThinkPad T450's BIOS. It also stopped working with my PXE boot Mint version. So I have now added Linux Mint Debian Edition to the Nerdhole Supported Distros. That shits itself on boot, so I may have to tweak the linuxefi lines.

Damn it! I was enjoying Linux Mint, but if this sort of thing keeps happening I may have to start looking for another distro.

2024-10-17 21:23 KVM Clients next

As it turns out, KVM is a breeze to install. The most difficult part is to move the KVM host's IP address from the Ethernet interface to its Bridge interface, and the script I wrote to do that still works. All I need is to re-add the extra swap space. But I'll do that when I find I need it.
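For comparison with that script, an untested sketch of the same move with the community.general.nmcli module. The connection names, interface names and the 192.0.2.x addresses are hypothetical. Run it from the console rather than over SSH, since the host's address moves while it runs, and the old Ethernet profile still has to be removed or deactivated afterwards.

```yaml
- name: "Create the bridge that will carry the host's address"
  community.general.nmcli:
    conn_name: br0
    type: bridge
    ifname: br0
    ip4: 192.0.2.10/24        # hypothetical address
    gw4: 192.0.2.1
    dns4: [192.0.2.2]
    stp: false
    state: present

- name: "Make the Ethernet interface a port of the bridge"
  community.general.nmcli:
    conn_name: br0-port-eno1
    type: bridge-slave
    ifname: eno1              # hypothetical interface name
    master: br0
    state: present
```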

Next are the KVM Clients. These may be slightly more challenging, because when you create the things with Virt-manager, it turns on Secure Boot by default, and that does not seem to work well with shimx64. I think I have found where in the XML to change that, so I'll try that next.

2024-10-13 13:23 On to the next...

Well, I think the "Workstation" role is done for now. Next comes a complicated one: the KVM host. I'll have to copy that one over from Paya and adapt it to NSCHOOL sensibilities.

2024-10-13 11:06 Quality of life

I am now using Alucard to do most of my work. Just to get used to the environment. I am becoming a little disenchanted with CentOS as a working environment I have to say. On Rayla, I've been using Cinnamon and that feels a lot more eager to please for want of a better term. There is no more "Places" menu in Gnome. Also Rhythmbox has disappeared and I am now using Audacious to listen to Mary and Eva.

I have "Solved" my certificate problem by ignoring it for now. At some point, I will have to solve the whole certificate situation because it is a major pain in the proverbials and won't look good if I ever install someone else's network. But be that as it may... onwards and upwards.

The only app I actually use that isn't installed yet is Skype. Microsoft seem to have changed its packaging from RPM to something not-RPM called Snap. Using Snaps requires me to install snapd first. I'll do it by hand for now and see where it leads me.

ETA: Well, it seems Skype is joining Discord in being a browser-only application. Snaps do not work with my setup unless I allow root to access NFS-based file systems. It also doesn't seem to work well with SELinux, which I am also not turning off. I need to put in a call with my parents on Skype. Give them the news that I'm doing Covid again... Better shave first.

2024-10-12 21:21 Certifiably insane...

I'm having trouble with my certificates. Again. When I try to access Sypha's website from Alucard, I get the error SEC_ERROR_REUSED_ISSUER_AND_SERIAL. This is because both Paya and Sypha claim to be the owner of www.nerdhole.me.uk. I have in fact tried several times to remove the nerdhole certificate from my Firefox, but like a fkn vampire it comes back every time! I may just have to nuke my entire Firefox configuration and start all over again. Builder can actually access Sypha's www.

However, there, I have a different problem. IPA has not included www.nerdhole.me.uk in its list of hosts that the nerdhole certificate applies to. So it says "I'm not gonna trust this one." I really need to get a better grip on how these damn certificates actually work. I could probably get this to work, but I want to do this properly. Part of the problem of course is that I'm using the same box for both IPA and WWW, which is not advisable as soon as you grow past a single server. Keep your IPA in its tight little corner and use a different box or VM for your web services.
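A quick way to see which names a certificate actually covers, as a sketch with community.crypto. The certificate path is a guess at where IPA keeps the web server's certificate and should be checked before use.

```yaml
- name: "Read the web server certificate"
  community.crypto.x509_certificate_info:
    path: /var/lib/ipa/certs/httpd.crt   # assumed path
  register: www_cert

- name: "Check that www is among its subject alternative names"
  ansible.builtin.assert:
    that: "'DNS:www.nerdhole.me.uk' in www_cert.subject_alt_name"
    fail_msg: "SANs are: {{ www_cert.subject_alt_name }}"
```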

Imma sleep on it. Mr. Tesco just brought me four cans of Doom Bar.

Oh. I tested Wednesday, and I have the coofs! I hope there will be no stripe on the tester come Monday or I am not going to Krakow.

2024-10-12 13:48 Getting complicated quickly...

I'm having some trouble with users. I want my reinstall playbooks to be runnable by any sysadmin. However, at the time of PXE install, my account isn't on the target system yet so I need to use the builder account, with its ability to become root. There are two problems I ran into:

- The wait_for module is shite.

- The target host gets a shiny new SSH key on each reinstall.

The wait_for module does weird things with sudo. I use the wait_for module to determine that the target (physical) host is down, and then that it has started its Anaconda installer by testing for port 111 open on the target. Once it is reinstalling, I can go ahead and delete all of the target's SSH keys from IPA (using the appropriate module) and from everybody's known_hosts. Then I wait until the machine shows signs of life on port 22, and then I run an ssh-keyscan on it. So... Who needs that key? Where it ends up is in root's known_hosts, where it is of no use at all. Root will in due course put it in a central location managed by IPA, where everybody can see it. The only user who needs it is the system administrator running the playbook, until such time as it has an IPA record. IPA's feature of managing SSH keys is brilliant. Solves a lot of problems. No more WARNINGS THAT SOMEONE MAY BE DOING SOMETHING NASTY OMG!!!
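A sketch of the known_hosts half for the sysadmin running the playbook. The tasks run on the control node without become, so the key lands in that user's own ~/.ssh/known_hosts rather than root's. It relies on ssh-keyscan and Ansible's known_hosts module; the ed25519 key type is an assumption.

```yaml
- name: "Forget the old host key (runs as the calling user)"
  ansible.builtin.known_hosts:
    name: "{{ inventory_hostname }}"
    state: absent
  delegate_to: localhost
  become: false

- name: "Fetch the freshly generated host key"
  ansible.builtin.command:
    argv: [ssh-keyscan, -t, ed25519, "{{ inventory_hostname }}"]
  register: keyscan
  changed_when: false
  delegate_to: localhost
  become: false

- name: "Trust it in the calling user's known_hosts"
  ansible.builtin.known_hosts:
    name: "{{ inventory_hostname }}"
    key: "{{ keyscan.stdout }}"
    state: present
  delegate_to: localhost
  become: false
```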

Going to have a cup of coffee and think about the problem.

Right. The Solution That Works after many tries:

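```yaml
- name: "Wait 10 minutes for user to restart the system from the network"
  local_action:
    module: ansible.builtin.wait_for
    host: "{{ inventory_hostname }}"
    port: 22
    timeout: 600        # seconds
    state: stopped      # succeed once nothing listens on port 22 any more
    msg: "Host {{ inventory_hostname }} is still running SSH!"
  become: no            # no sudo for a task that runs on the control node
```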

The "become: no" clause will stop Ansible from using sudo to switch to root and getting confused about the sudo password prompts. This is a local action, so I am almost sure that it won't try to ssh into localhost for this. Once ssh is gone, a similar stanza will wait until Anaconda on the target opens up port 111, and then we will know the installation is underway and we can relinquish control to the calling process, which will remove the host from IPA and the calling user's known_hosts file. So that works now, all that remains is to properly document it because it is not at all obvious.
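The "similar stanza" for the second wait could look like this: a sketch mirroring the one above, with the same 10-minute timeout as an assumption.

```yaml
- name: "Wait for Anaconda to open port 111 on the target"
  local_action:
    module: ansible.builtin.wait_for
    host: "{{ inventory_hostname }}"
    port: 111
    timeout: 600
    state: started      # succeed once something listens on port 111
    msg: "Host {{ inventory_hostname }} never started its installer!"
  become: no
```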

2024-10-08 11:02 Well that seems to have worked

Adding the CRB repo to the Nerdhole seems to have done the trick. A yum install of openshot now shows all green and we will be able to edit videos in the Nerdhole. Woot. As a side benefit I now also have ffmpeg installed, which is good for sites bearing videos. A lot of apps like Skype use these libraries, so that should be easier to install as well.

2024-10-07 16:34 It's been that long...

Well folks, I have not been doing much about NSCHOOL for almost a month, but that is going to change! I am done with Lion in Winter thank COBOL and Algol, so now I have my evenings free for reinstalling Alucard over and over again.

I tried to install Openshot (a video editor), and it refused with a lot of failed dependencies such as libffmpeg and the like. Turns out these are in a repository named CRB which is roughly the same idea as EPEL, but different. Don't know, don't care. So I have just finished syncing that repo from the Internet, and now it is time to reinstall Alucard yet again and see if I can install cool applications with the CRB repo added. The idea is still that I can install the entire machine from my local resources.
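Pointing the clients at the local copy of CRB could be done with Ansible's yum_repository module. A sketch; the repository URL on the main server is hypothetical, and the GPG key path assumes the CentOS key file shipped on CentOS Stream 9.

```yaml
- name: "Use the local mirror of CRB"
  ansible.builtin.yum_repository:
    name: nerdhole-crb
    description: "CRB mirror on the main server"
    baseurl: http://sypha.nerdhole.me.uk/repos/crb/   # hypothetical URL
    gpgcheck: true
    gpgkey: file:///etc/pki/rpm-gpg/RPM-GPG-KEY-centosofficial
    enabled: true
```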

In other news I have bought and installed into Algernon a big fat twelve-terabyte disk for the express purpose of storing backups from all the other machines. I will consider adding an external USB disk for offsite storage later. I think I'll be using a combination of direct (rsync) copies of the local files for things I download off the Internet, and Bacula for things where I might want to store multiple versions of the same files and directories. In addition, I'll create a big storage tank for things like video files downloaded off other people's cameras and the like. You need some place to store them.

I think I'll go and find something to cook and eat it now.

2024-09-11 22:27 Chickens and eggs

Just got home from another rehearsal. I managed to get all the DMX information for an Elumen8 Virtuoso 600 RGBAL profile spot into QLC Plus. I can't test it, of course, because I have no DMX interface between Rayla and the MLT DMX universe. I think I'll buy one. The provisional DMX cable I ran to get a light in the window has developed a fault and breaks the rest of the universe. Need to fix that.

Also, there is a chicken-and-egg problem with Project Belnades. I always intended the builder user to be a temporary fixture, to be removed once the authentication services are up and running. So now I have IPA, I have my boot/install server, and I want to install a client. That client also has a local builder user, which I need in order to install the IPA client. For reasons of SELinux, I cannot use public-key authentication for that, and the rest of the bare-metal install runs under my own account, with a well-aimed become stanza.

I am not known on the client before IPA is up and running. I am known on the BIS server, so I can prepare all the installation files there as root. And while builder is still there for now, I need to remove it from Sypha before long, so I can't run the install from the builder account.

Options:

- Keep builder on as a permanent fixture. I can run the bare-metal install as builder, with the well-known password. Which is not what I want, but I can justify it if I use builder only to build new machines.

- Install IPA from Kickstart instead of Ansible so all the users will be there right out of the gate. But laptops don't get IPA, so I would have to make a separate kickstart file for the laptops. Don't want to.

- Fix the SELinux problem with builder's home directory so I can use public-key authentication to ssh from my own account to builder on the client. I'd need builder's private key in my SSH keyring.

What do? Time for a nap.

2024-09-04 10:55 Pulp!

I'm just putting the name here so I don't forget it. Pulp is an application that manages local copies of remote mirrors. A bit of a shell round reposync. Since I intend to have several machines on various versions of Linux, this may be of interest.

2024-09-02 17:51 Subscription manager?!

Apparently Red Hat, the upstream benefactor of CentOS, is now installing a subscription manager. This gives us errors. So put this on the to-do list:

In the file: /etc/dnf/plugins/subscription-manager.conf

Change the line enabled=1 to enabled=0. Really, people? I use CentOS specifically so I don't need a Red Hat subscription. Oh well. One stanza in pxeinstall's tasks/main.yml should fix it.
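The edit is small enough to sketch in Python, which also shows what the stanza has to guarantee: change only the enabled line, leave everything else (comments, other settings) alone, and do nothing on a second run. The function names are hypothetical, and the sketch assumes the setting appears as a plain enabled=1 line.

```python
import re
from pathlib import Path

CONF = Path("/etc/dnf/plugins/subscription-manager.conf")

# A line that is exactly "enabled=1", allowing spaces/tabs around "=".
# [ \t] rather than \s so the pattern can never swallow a newline.
_ENABLED_ON = re.compile(r"^([ \t]*enabled[ \t]*=[ \t]*)1[ \t]*$", re.MULTILINE)


def disable_plugin_text(text: str) -> str:
    """Return text with enabled=1 turned into enabled=0; other lines untouched."""
    return _ENABLED_ON.sub(r"\g<1>0", text)


def disable_plugin(path: Path = CONF) -> bool:
    """Apply the edit in place; return True only if the file actually changed."""
    old = path.read_text()
    new = disable_plugin_text(old)
    if new != old:
        path.write_text(new)
    return new != old
```

Because the pattern matches only the literal enabled key, a neighbouring setting such as disable_system_repos is left alone, and a second run reports no change.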

2024-08-30 08:29 So bloody tired

Why am I bothering to build this entire environment? Isn't a simple PC with a web browser enough for me? Why am I doing this? The answer is simple. Therapy. I geek Linux professionally in some institution in London. Or at least I try to. In fact, I spend most of my time filling out forms, where I have to beg for access to the systems I need and explain to a variety of people what it is I am planning to do.

This place is my sanctuary. I have full control over it. I can design and build it exactly to my own specifications. I can prove to myself that I still can wrangle Linux, because I get SFA opportunity to do it at work. If anyone else reads it, if anyone else benefits from my ramblings, that is a bonus. Gravy. A point of light in what is rapidly becoming an unending whirlwind of crap. Right then. Got this off my chest, time for more meetings.

2024-08-28 11:04 Lunch break at work

Rather than take a potentially expensive walk outside, I think I'll work a little on my NSCHOOL documentation. Take the opportunity to do some genuine Linux work. I'm afraid I'm on the downswing again. I really, really need to get some result, any result. On a positive note, I can now auto-install a minimal image of CentOS Stream 9 using Kickstart. All the important things are in variables. Now all I need to do is port my IPA Client and Workstation roles over from Paya, and then I'll be able to build a working Linux office environment fully automated. Even the main server is now installed with a playbook. Maybe I'll re-spot Sypha one more time to verify that everything does in fact work.

I can now install seven different flavours of Linux from my Linux server!

I need to remind myself occasionally that I do have my moments. With all the crap at work, it's easy to forget that I am in fact a capable Linux admin. Soon, I will be able to auto-install whole virtual machine environments from my main server, and not only that, I know how to set one up from scratch. I can build an entire office out of Linux machines! And this will start with the Nerdhole itself.

2024-08-18 10:13 Feels like the final push

Well then. I have solved the login problem, and I have downloaded the CentOS Stream 9 repos to Sypha. The CentOS 8 Stream repos seem to have gone a bit screwy with alternate "Vault" URLs. I'll figure those out later. I am now writing this on Sypha itself, under my own username. So I need to add a few stanzas to put the correct SELinux file context on /local/nschool/bis/repos so that Apache can read it. Then I need to figure out the proper URLs for the NerdHole CentOS repos and configure those into Yum on the clients. Sypha will never use her own repositories, but will download straight from the Internet, so she'll be the most up-to-date machine. Will need a little thought on how to set up a regular job for downloading updates for the rest of the Nerdhole.
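On the client side, a .repo file pointing at the mirror is all Yum needs. A sketch of generating one in Python; the URL layout under /bis/repos/, the nerdhole- prefix on the repo IDs and the GPG key path are all assumptions, since the real URLs are still to be worked out.

```python
# Hypothetical values: the real URL layout on Sypha is not decided yet.
BASE_URL = "http://sypha.nerdhole.me.uk/bis/repos"
GPG_KEY = "file:///etc/pki/rpm-gpg/RPM-GPG-KEY-centosofficial"  # assumed key file


def repo_stanza(repo_id: str, name: str,
                base_url: str = BASE_URL, gpgkey: str = GPG_KEY) -> str:
    """One [section] of a .repo file pointing at the local mirror of repo_id."""
    lines = [
        f"[nerdhole-{repo_id}]",
        f"name=Nerdhole mirror of {name}",
        f"baseurl={base_url}/{repo_id}/",
        "enabled=1",
        "gpgcheck=1",
        f"gpgkey={gpgkey}",
    ]
    return "\n".join(lines) + "\n"


def repo_file(repos: dict[str, str]) -> str:
    """A whole .repo file for {repo_id: human-readable name}, one stanza each."""
    return "\n".join(repo_stanza(rid, name) for rid, name in repos.items())
```

Writing the output of repo_file({"baseos": "CentOS Stream 9 BaseOS", "appstream": "CentOS Stream 9 AppStream"}) to a file under /etc/yum.repos.d/ on a client would then point it at Sypha.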

So. Let's get on with it.

Hmm... I keep getting "Unreachable" errors for Sypha when running from Sypha, halfway through the playbooks. This is disconcerting.

2024-08-16 17:22 Good ideas?

I would like to add my many stories to the Nerdhole website under a separate heading. So I'll have to write a Perl script to convert tales to Markdown. I tried inserting HTML and it did not go well.

Mkdocs is annoying. It tends to get broken by system updates, and then I have to reinstall it with force flags. Loath as I am to do it, I think I'll need to put it in a virtual environment to keep it from breaking. Because Pythonistas are idiots who think backwards compatibility is for wimps.
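A minimal sketch of the virtual environment approach using Python's standard venv module. The location /opt/mkdocs-venv in the usage note is an assumption; the point is that mkdocs gets installed with the environment's own pip, so system package updates no longer touch it.

```python
import subprocess
import venv
from pathlib import Path


def make_venv(path: Path, with_pip: bool = True) -> Path:
    """Create a virtual environment at path (if missing) and return its python."""
    python = path / "bin" / "python"
    if not python.exists():
        venv.EnvBuilder(with_pip=with_pip, symlinks=True).create(path)
    return python


def pip_install(python: Path, *packages: str) -> None:
    """Install or upgrade packages inside the venv, using its own interpreter."""
    subprocess.run([str(python), "-m", "pip", "install", "--upgrade", *packages],
                   check=True)
```

Running pip_install(make_venv(Path("/opt/mkdocs-venv")), "mkdocs") would leave the command at /opt/mkdocs-venv/bin/mkdocs, independent of whatever the system Python does.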

2024-08-16 10:40 Almost weekend

This weekend I will divide my time between doing Tech at MLT and finishing up the user authentication and logins. I will also need to replicate the CentOS Stream 9.x repos to Sypha. Which will take a while, so I'll start it up on the Saturday, go sweat away at the theatre and hopefully find a complete copy of the repos when I get back. Then I need to copy over and redesign the Kickstart file. I'll have one kickstart file template for each version of Linux I support. Those kickstart files will be available on http://sypha.nerdhole.me.uk/bis/ks/hostname.nerdhole.me.uk.ks. When I can reinstall Alucard without fail using Sypha's resources, then I can start copying over the data from Paya to Sypha and start using it.
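The per-host URL scheme above is easy to pin down in a few helper functions. A sketch in Python; the domain and base URL come from the example URL above, while the template naming (ks-<os label>.cfg.j2) is hypothetical. inst.ks= is the Anaconda boot option that tells the installer where to fetch its kickstart file.

```python
DOMAIN = "nerdhole.me.uk"
KS_BASE = "http://sypha.nerdhole.me.uk/bis/ks"


def fqdn(host: str, domain: str = DOMAIN) -> str:
    """Qualify a short host name; leave an already qualified one alone."""
    return host if host.endswith("." + domain) else f"{host}.{domain}"


def ks_url(host: str) -> str:
    """Where the generated kickstart file for host is published."""
    return f"{KS_BASE}/{fqdn(host)}.ks"


def ks_template(os_label: str) -> str:
    """Hypothetical naming: one kickstart template per supported OS label."""
    return f"ks-{os_label}.cfg.j2"


def boot_args(host: str) -> str:
    """Kernel argument telling Anaconda where to fetch its kickstart file."""
    return f"inst.ks={ks_url(host)}"
```

Keeping the URL rule in one place means the grub.cfg template and the kickstart generator cannot drift apart.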

For now, Paya is the only machine to run Thunderbird. I did that back in the day because version differences in the Thunderbird client caused problems. I will have to keep all my Thunderbirds on the same level to avoid stuff like this.

Also, I am ditching the Zoom and Discord clients on Linux. They are genuinely more trouble than they're worth. I spend more time updating Discord than actually using it. Someone please teach these people the joys of RPMs and software that doesn't mainly live in the users' home directories thank you very much!

On with the show!

2024-08-14 16:13 Found it!

Well, I think I have found the login problem. I was creating the users with the same UIDs they have on Paya. However, when IPA server gets installed, it picks a random range of 200,000 IDs for its users, and if you define users outside of that range, you can't log in.

So I'll have to pass --idstart=IDSTART to ipa-server-install to set that range to what it is on Paya, and reinstall Sypha again. Let's see if it works then.
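Before reinstalling, it is worth checking that every UID carried over from Paya lands inside the range that will be requested. A Python sketch, assuming the default range size (ipa-server-install sets --idmax to idstart + 199999 unless told otherwise); the helper names and sample UIDs are made up.

```python
# ipa-server-install defaults --idmax to idstart + 199999, i.e. 200,000 IDs.
DEFAULT_RANGE_SIZE = 200_000


def id_range(idstart: int, size: int = DEFAULT_RANGE_SIZE) -> range:
    """All IDs covered by a range starting at idstart."""
    return range(idstart, idstart + size)


def outside(uids: list[int], idstart: int,
            size: int = DEFAULT_RANGE_SIZE) -> list[int]:
    """UIDs that fall outside the range (the users who could not log in)."""
    covered = id_range(idstart, size)
    return [uid for uid in uids if uid not in covered]


def lowest_idstart(uids: list[int], size: int = DEFAULT_RANGE_SIZE) -> int:
    """Smallest idstart that covers every existing UID in a single range."""
    low, high = min(uids), max(uids)
    if high - low >= size:
        raise ValueError("existing UIDs do not fit in one range")
    return low
```

An empty result from outside(paya_uids, idstart) is the sign that the reinstall will keep every user inside the IPA range.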

2024-08-14 11:58 Ippa!

So I didn't get round to debugging my FreeIPA user creation. It's a bit weird. When I use the Ansible IPA module to create a user, it gets created. You can su to it from root, but as soon as a password gets involved, it worketh not. When I create another user from the web GUI, it works fine. So the pythonistic snake fuckers have messed up? Or am I missing something?

I think I'll try to create a new user using the IPA command and see how that fares.

2024-07-30 08:25 Tired, tired...

Why is it such a bloody pain to get anything done at work?

Anyway, I need to debug my FreeIPA installation. As far as I can tell, it's the Kerberos part.

2024-07-19 07:11 Re-work of the "os" data structure

I made a little design mistake in the bis.os data structure. It is currently a list, like so:

os:
  - label: centos-stream-9
    name: CentOS Stream 9
    iso: CentOS-Stream-9-latest-x86_64-dvd1.iso
    initrd_file: images/pxeboot/initrd.img
    vmlinuz_file: images/pxeboot/vmlinuz
    ...
  - label: linuxmint-21.3-cinnamon
    name: Linux Mint 21.3 Cinnamon
    iso: linuxmint-21.3-cinnamon-64bit.iso
    initrd_file: casper/initrd.lz
    vmlinuz_file: casper/vmlinuz
    ...

This means that if I need to access the OS for a given machine, I'll have to loop through this list rather than just jumping to the one I want. I need to rework this into a dictionary so that I can address the OS record of a host as {{bis.os["centos-stream-9"]}}. So I need to change the template that generates the default grub.cfg to use this, and maybe other places too. Baby's first refactor. This is what it should look like:

os:
  "centos-stream-9":
    label: centos-stream-9
    name: CentOS Stream 9
    iso: CentOS-Stream-9-latest-x86_64-dvd1.iso
    initrd_file: images/pxeboot/initrd.img
    vmlinuz_file: images/pxeboot/vmlinuz
    ...
  "linuxmint-21.3-cinnamon":
    label: linuxmint-21.3-cinnamon
    name: Linux Mint 21.3 Cinnamon
    iso: linuxmint-21.3-cinnamon-64bit.iso
    initrd_file: casper/initrd.lz
    vmlinuz_file: casper/vmlinuz
    ...

I am repeating the label inside the record so that I can pass the entire record to a role and address it as {{item.label}}.
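The refactor boils down to turning a list of records into a dictionary keyed on label. A sketch in Python on plain dicts, with the records trimmed to three fields; os_by_label is a hypothetical name, and refusing duplicate labels is an added safety check, not something the design above mentions.

```python
def os_by_label(os_list: list[dict]) -> dict[str, dict]:
    """Re-key the old list of OS records as a dict indexed by label.

    The label stays inside each record too, so one record can still be
    passed to a role on its own and read back as item.label.
    """
    by_label: dict[str, dict] = {}
    for record in os_list:
        label = record["label"]
        if label in by_label:
            raise ValueError(f"duplicate OS label: {label}")
        by_label[label] = record
    return by_label


old_os = [
    {"label": "centos-stream-9", "name": "CentOS Stream 9",
     "initrd_file": "images/pxeboot/initrd.img"},
    {"label": "linuxmint-21.3-cinnamon", "name": "Linux Mint 21.3 Cinnamon",
     "initrd_file": "casper/initrd.lz"},
]

bis_os = os_by_label(old_os)
print(bis_os["centos-stream-9"]["initrd_file"])  # images/pxeboot/initrd.img
```

The lookup is now a single key access instead of a loop, which is exactly what the grub.cfg template needs.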

Currently the documents that refer to bis.os are:

- ansible/roles/bis/tasks/main.yml

- ansible/templates/efi_grub.cfg.j2

- docs/designs/Ansible.md

And of course, now, the home page. Maybe I'll find some time to do that today.

2024-07-18 06:20 Bit rot already...

It's one of those things. One of the big rules in IT: store information in only one place. Because as soon as you store the same information in two places, those places will go out of sync. Update one and you need to update the other. Even in something as mundane and simple as a set of Markdown files! So today I will mostly be reading my documents and checking them for duplicates and inconsistencies.

2024-07-17 06:22 In the train in the train...

I'm being forced into the office. This means I have to get up around 5am, spend an hour in transport, and then sit at a desk all day. Monday was a complete washout for human contact, nobody I knew was there. Yesterday, I was sitting next to one colleague but since we don't really do the same things, we hardly spoke a word. Today, a few more people are expected to be in. Let's see how that works out. This is a complete and utter waste of time and money!

But it does give me the chance to update my NSCHOOL docs on the way. The reorganisation of my Git repo takes the strain out of bringing all my stuff on the road. So what's next? I've written up my group design, which is pretty simple. So now I need to work on my workstation installation scripts. I already have a library of Ansible playbooks for making physical and virtual machines, so I need to adapt those to CentOS 9. Let's see... I think I'll document it in my BIS design.

TO DO: Bring the BIS braindump directory structure in sync with what is on the machine...

2024-07-15 12:23 Download all the things!

So I now have a main server that can support clients - IP address management with DNS and DHCP, authentication with FreeIPA, web services with Apache and so on. I have also got the manual installations to work. So now I need to start installing things automatically, using Kickstart or the Debianoid equivalent. I will continue the current setup in that Kickstart is only used to make the machine Ansibleable using SSH. From there, everything is done by Ansible. I will probably have lots of VMs running CentOS 8, as well as CentOS 9. So those repositories at least I want to have locally mirrored using Reposync. I'll reinstate the cron job that downloads the latest updates. I do want to start supporting Rocky Linux, so maybe I'll mirror that one as well. Earlier CentOS versions, Fedora, Debian, I am not that fussed about. My laptops work wonderfully with Linux Mint, but I don't anticipate reinstalling them all that often. Laptops will likely devolve into pets at some point. So they can be installed from the Internets.
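The download job could be as simple as one dnf reposync call per repository, run from cron. A Python sketch that builds those command lines; /local/nschool/bis/repos (the directory from the 2024-08-18 entry) is assumed to be the mirror root, and the choice of flags is an assumption to adjust.

```python
import subprocess

# Assumed mirror root on Sypha (the directory from the 2024-08-18 entry).
MIRROR_ROOT = "/local/nschool/bis/repos"


def reposync_cmd(repo_id: str, root: str = MIRROR_ROOT,
                 newest_only: bool = False) -> list[str]:
    """dnf reposync command that mirrors one repo into root/repo_id."""
    cmd = [
        "dnf", "reposync",
        f"--repoid={repo_id}",
        f"--download-path={root}",
        "--download-metadata",  # keep repodata so clients can use the copy directly
        "--delete",             # remove local packages that vanished upstream
    ]
    if newest_only:
        cmd.append("--newest-only")  # smaller mirror, but no older versions
    return cmd


def sync_all(repo_ids: list[str], dry_run: bool = True) -> list[list[str]]:
    """Build (and, unless dry_run, execute) one reposync per repository."""
    cmds = [reposync_cmd(repo_id) for repo_id in repo_ids]
    if not dry_run:
        for cmd in cmds:
            subprocess.run(cmd, check=True)
    return cmds
```

sync_all(["baseos", "appstream", "crb"], dry_run=False) would run the downloads for real; a crontab entry calling a small wrapper around it would bring back the regular update job.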